Note: Updated October 24, 2013, to fix some editorial nits, and to clarify the intended point that it is the combination of a working mark/drop algorithm with flow scheduling that is the “killer” innovation, rather than the specifics of today’s fq_codel algorithm.

Latency (called “lag” by gamers), once incurred, cannot be undone, as best first explained by Stuart Cheshire in his rant: “It’s the latency, Stupid.” and more formally in “Latency and the Quest for Interactivity,” and noted recently by Stuart’s 12 year old daughter, who sent Stuart a link to one of the myriad “Lag Kills” tee shirts, coffee bugs, and other items popular among gamers.

Out of the mouth of babes…

Any unnecessary latency is too much latency.

Many networking engineers and researchers express the opinion that 100 milliseconds latency is “good enough”. If the Internet’s worst latency (under load) was 100ms, indeed, we’d be much better off than we are today (and would have space warp technology as well!). But the speed of light and human factors research easily demonstrate this opinion is badly flawed.

Many have understood bufferbloat to be a problem that primarily occurs when a saturating “elephant flow” is present on a link; testing for bufferbloat using elephants is very easy, and even a single elephant TCP flow from any modern operating system may fill any size uncontrolled buffer given time, but this is not the only problem we face. The dominant application, the World Wide Web, is anti-social to any other application on the Internet, and its collateral damage is severe.

Solving the latency problem requires a two prong attack.

Web Performance and Its DOS attack on the Internet

Most applications’ performance are much more sensitive to latency than to bandwidth. For example, web browsing is not usually regarded as a latency sensitive application, but in fact, it is very sensitive to latency!

Today’s broadband connections throughput is already past the point of diminishing returns for typical web browsing: increasing broadband will not significantly improve performance, but reducing latency can improve performance further.

Elapsed time is usually dominated by of round trips that occur during fetching a web page, including: delays for:

- DNS lookups (when not cached, usually a single RTT from client to server)

- TCP opens (the three way handshake that occurs when a connection to a server is used for the first time)

- SSL/TLS negotiation (one or two RTT’s, if the connection is secured)

- The actual HTTP request and response

Our original intent was to hide much/most of these round trip times by use of HTTP/1.1 pipelining (I was the editor of the IETF standards document); unfortunately, for various historical and technical reasons, pipe lining has not been widely deployed and there is little hope it will ever be. Our best hope for reducing the number of TCP connections used and number of RTT’s a web browser uses is, I think, SPDY. If you know nothing about SPDY, the link to a Tech Talk here, with slides here by Roberto Peon & William Chan. 1 I use a number of their graphs below to illustrate the problems the web now causes for itself, but due to FIFO queues, HTTP now inflicts great damage to other real time applications.

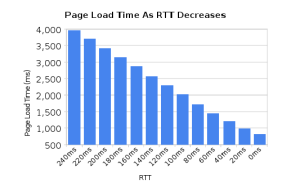

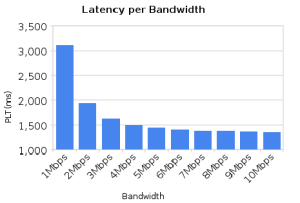

The two graphs show just how sensitive the web is to latency. As you can see, the page load time decreases essentially linearly with latency. The benefit of bandwidth improvements is much less pronounced. While page load time does improve as bandwidth goes from 1 Mb/s to 3 Mb/s, above that — bandwidths that are now commonplace with broadband, 802.11, or 4G cellular services — we are beyond the point of diminishing returns and page load times show little further improvement. The Google data shows a 14x multiplier. +200ms RTT increases page load time by +2.8 seconds. The Page load time (PLT) at 10 Mbps almost indistinguishable from 6 Mbps. To improve web performance, we must improve latency. If you study output from webpagetest.org on web sites, you can see your CPU is usually idle, and how much time is lost due to waiting for round trips.

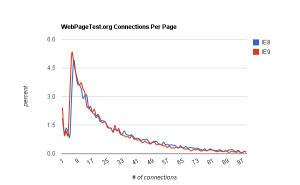

Most web sites have a tremendous number of embedded objects, as you can see from this graph. To avoid as much head of line blocking and take advantage of the much higher broadband performance available today, web clients and servers (via web site sharding) have conspired to use as many TCP connections as possible, often tens of connections at once. Web site designers often measure performance as how quickly the packets leave the data center, but the reality that they may not be improving actual elapsed time to the end user is usually ignored. The days of RFC 2616’s “two TCP connection rule” are long gone. (Section 8.1.4). And since RFC2616 was written, TCP’s initial window (IW) has been raised, first to 4 packets, and recent changes may (have already) raised that further to 10 packets. To add to this mess, some broadband equipment (under provisioned in the upstream direction) elides TCP acks, and network offload engines in servers, hosts, and even home routers, can and will transmit up to 64K bytes of packets at line rate without operating system intervention. Each of these changes may have made sense in isolation; together they spell disaster.

It is easy for there to be flights of packets that can reach more than a hundred packets in length, leaving data centers at high speed, cross the Internet, and arrive at the bottleneck link(s), usually your broadband, cellular or WiFi links.

Just as HTTP often suffers from “head of line blocking” in its protocol design, any FIFO (First In, First Out) network queues in your operating system and/or network devices impose head of line blocking in the network path at bottleneck links, incurring latency both to the web browser itself and to other applications sharing that bottleneck. As you will see below, particularly at the edge of the network, single FIFO queues must be replaced by something less stupid; fortunately such algorithms have existed for a long time (and we often even use them in airports!). Our network needs similar intelligence.

In a web transaction, the base web page is downloaded and parsed. Immediately thereafter, the web browser then fetches all the embedded objects, which as the above graph shows, are many. Since most web objects are small, many/most of them never go much beyond the TCP IW, much less complete slow start in a bloated connection. The web has effectively defeated TCP congestion avoidance. Now we’ll do some simple math on what happens next, using two examples:

- If a web browser now opens only 10 TCP connections/page, (which it will do easily since web site sharding means the browser’s limits on # connections are being defeated), this means that (after the initial DNS lookups, TCP opens, and possible SSL negotiations take place), 40 packets will be in flight from your web site to your web browsers (the IW * # of connections; most images are big enough to fill 4 packets), and start queuing at the bottleneck link. In this example, your bottleneck is your WiFi router; you have an 802.11g router running at full speed since it is nearby (which is about 20Mbps throughput at best). Since the router’s queue is a FIFO queue, no other application will share that link for about 25 milliseconds. Not so bad, eh? Now think about what happens on a busy 802.11 network (for example, at a conference or in a hotel), or where you are farther away from the router. Your throughput may be only 1Mbps. The latency incurred on anything else sharing the link will be 500ms! In fact, you probably have had HOL blocking taking place in two places in this example: first at your broadband connection, which is a bloated FIFO queue, and then again at your home router.

- You visit a really image heavy web site (of which there are many, as seen in the graph above), heavily sharded with relatively larger images, using IW10. The Huffington Post is a good poster child for such a dismal web site (though it has many other problems too!). You can easily have hundreds of packets in flight at once, incurring corresponding latency. I have observed up to several hundred milliseconds of transient latency on a 50Mbps cable connection on certain web sites! Just increasing bandwidth is not going to get us the latency we need, as I’ll demonstrate in the next section.

I keep the number of 13ms/1500 byte packet@1Mbps in my head for doing this arithmetic. In short, web surfing is putting impulses of packets, without congestion avoidance, into FIFO queues where they do severe collateral damage to anything sharing the link (including itself!).

So today’s web behavior incurs huge collateral damage on itself, data centers, the edge of the network, and in particular any application that hopes to have real time behavior. How do we solve this problem? There is no real way back to the Internet of the 1980’s, and fundamentally, the problem is not soluble solely in the hosts on the Internet; the network itself must perform better adjudication among users and applications.

Real Time Applications

Other applications have differing latency requirements, usually set by human perception; to remind those of us who have not worked in the area of human factors, some numbers I keep in mind include:

- For traditional quality VOIP (or teleconferencing), the acceptable latency is no more than approximately 150ms. This rule of thumb was set many decades ago in the conventional POTS phone network, acknowledging the speed of light on transcontinental paths; but lower is always better.

- For keyboard key echo to appear “instant,” the acceptable latency is around 50ms.

- For “rubber banding” to “feel solidly attached” to your hand, your desirable latency is less than 30ms, or it feels as if your feedback sprite is literally connected by a “rubber band”.

- Professional musicians playing together may be able to tolerate (but not enjoy) up to 100ms of latency. But for duffers to play music together successfully, the latency may only be 20-40ms.

- You can regard 20-30ms as the point at which most things appear instant to us humans.

- Serious gamers (or day traders; is there a difference?) care about differences of even a single milliseconds of latency, as they are competing with others and it affects the success of their competition, and strive for under 15ms latency total. Wall Street installs special purpose networks to shave milliseconds (or microseconds) off of transactions.

We have latency “costs” we must budget for: it is unacceptable for the network to use all available time allowing no time for anything else in the end to end system. System performance == network delays + all other delays.

These “other” delays include:

- Cameras and other input devices (mice, touch pads, etc.) also typically insert latency between the user and the application, often in the 10 or more millisecond range. At 60hz, this is an additional 16ms.

- Device driver rings for audio and video; there is latency driving multimedia hardware.

- Packetizing data incurs latency; a VOIP stream sends of order 30-100 frames/second. This incurs a delay of 10-30ms.

- Encoding (in particular) and decoding audio and video packets requires time and operate over blocks of samples or pixels.

- Operating system scheduler latency (operating system and load dependent)

- Mixing audio & video requires time.

- Access to the medium: both wireless and broadband technologies often have built in latency to access their shared medium, measured in milliseconds. In your home, you may have two such access latencies: WiFi and broadband. These access latencies go up when the medium is loaded. I measure 230 ms today on my hspa+ cellular service, and 11ms to MIT from home (40km + 9ms for cable + around 1ms for 802.11 latency in my radio quiet location).

- At long last, screen refresh is beginning to increase on desktop displays: but 60hz is commonplace on laptops to conserve power, which is about 17ms. Updated frames display are usually delayed until the next “vertical retrace” using buffer swaps to avoid annoying frame “tearing”.

- The packet requires time to be transmitted. A 1500 byte packet takes about 13ms to transmit @ 1Mbps, which may be your share of a busy WiFi network, or all you have on your DSL uplink. Note that this is the only point where higher bandwidth really helps your latency, and is often not significant relative to other sources of latency.

- And most insidious today: head of line blocking in “stupid bloated FIFO queues” in our operating systems, and worst yet, all through the path from end to end.

- The lower bound on latency speed of light latency is set by the speed of propagation in the medium and the throughput in that medium: in today’s Internet, a trans-continental link across the North America has an inherent latency of approximately 75ms, presuming good routing in the Internet, which is often not available. Speed in glass is about .6C. Latency is so critical to some applications (of dubious social value: e.g. high frequency trading) that we’ve seen the reemergence of microwave based networks to reduce latency below that possible with fiber based networks.

Latency must be regarded as a “budget”, and you spend down the tolerable latency at each “hop”. Sometimes you can hide latency behind other operations, but sometimes not. With careful engineering (often not present in today’s systems on “modularity” grounds), some of these delays could be hidden by “cut-through” or other techniques but in practice, all of these delays are typically incurred serially. You can never get spent latency back.

Given the speed of light is something we find it difficult to change, and impossible to change even by a factor of two, this means that for most applications, adding *any* additional latency reduces the quality of the user’s experience.

Latency variance (jitter) is also a key metric: if your latency spikes occasionally, applications that cannot tolerate these spikes are forced to operate at “worst case” observed latency over a long time period. Such spikes may be many times worse than “steady state” latency. Controlling latency to yourself and others sharing your bottleneck links even during latency spikes caused by web surfing is necessary.

You should view total latency (network + system + jitter) as a radius over which applications will be perceived as working fast and well.

You can see that even an obvious acheivable a goal of enabling people to play music together reliably in a metropolitan area requires care throughout the system, including the network stack. Even a single full sized packet matters, much less a flight of ten or 100 packets. In the quest for throughput, we’ve been ignoring latency for decades: but consistent low latency is inherently harder to achieve.

Packet Classification

The “traditional” solution to our latency problem has been by packet classification; it has been our only hammer. But there are a number of fundamental issues with traditional classification. These include:

Complexity: The QOS page on your home router is too complicated for most people (including networking engineers) to understand, much less your grandmother. Requiring users to fiddle with such configuration on home routers (and in their operating systems!!!) to make things work under unpredictable load is fundamentally an engineering failure and inhibits deployment of new technology. Yet that is what is now required.

Lack of control: Even if you can handle the complexity, one of the key queuing points is not under your control: it is at the head end of your broadband (or cellular) connections, where you have no control. Who sets the policy? Is it for pay? Who pays whom? This is at the heart of the network neutrality debate, which bufferbloat triggered in the first place. Today, we have the worst of all possible worlds: your Internet service has one bloated FIFO queue (if you buy telephony from your ISP, you get a second queue that only that traffic has access to, but competing services such as Skype or Vonage do not).

Packets are not created equal: The first packets in a new flow, or the first packets in a idle flow are usually much more valuable than later packets in terms of latency to applications, and should be scheduled for transmission ahead of routine bulk data packets. Applications cannot make further progress until they arrive. Traditional QOS ignores these factors. Here are examples that make this observation clear:

- TCP opens

- The SSL handshakes

- DNS lookups

- DHCP, RA and other housekeeping packets account for little of the bandwidth, but are essential to the correct operation of the network.

- Isochronous flows such as VOIP or other real time traffic are occasional packets separated by idle periods.

- The first few bytes of each embedded images on web pages, which have the image size information required for a browser to lay out a web page. To avoid annoying reflows on the screen, browsers need the size of all embedded images.

Bulk data transfer packets are much less sensitive to time; those later packets being delayed do not cost the same “human” time. Traditional packet classification is by protocol type. This information isn’t really useful in general; people tunnel all sorts of traffic over HTTP and while diffserv marking could be used, it is not widely used in applications, and can easily be gamed (it can be very useful in networks you control, however). The net result of trying to use classification to achieve better latency are complex, fragile, inscrutable, QOS rules that only someone who has won his complexity merit badge can love.

Hardware queues have limited value: having a limited number of hardware based queues does not solve the problem. To begin with, we’ve demonstrated here how HOL blocking damages “best effort” TCP traffic and the people using them. The hardware queues (e.g. 802.11e) may not even work correctly (as Dave Täht has been discovering in WiFi). And in many network technologies, the scarcest resource when loaded may be “transmit opportunities”. Your most timely packet scheduling for high priority traffic (however you decide to define high priority) will be to use the first transmit opportunity, aggregating lower priority traffic into the same aggregate when possible. You really don’t want to burn two transmit opportunities if a single transmit opportunity will do. Smart software packet scheduling can do much better and today’s processors are more than fast enough to cope.

Does traditional classification have value?

Yes, classification allows you to make absolute guarantees that smart queuing may not be able to make. It is a an important tool in our toolkit, but not the only possible tool.

For example, you may want to guarantee that guests can never get more than 10% of your network when busy for with your traffic. But traditional classification to solve all our latency problems is a dead end; it does not touch latency cause by HOL blocking and unmanaged buffering. Let’s attack the fundamental problem, by isolating and scheduling applications (and people and customers) between each other, and then classification becomes much, much less necessary.

Bloated Stupid FIFO Queues Must Die

Today’s use of TCP is radically different from when (W)RED and similar algorithms were developed: TCP’s initial window was but one or two packets (versus today’s 4 packets, on its way to 10 packets). In the 1990’s, most web browsers and web servers used but two TCP connections between them. Memory is cheap, processors are fast, and web servers seldom go to disk for anything.

Given the Mlabs and netalyzr data, we can easily understand that a substantial fraction of the broadband edge has so much buffering that there has been little observed packet loss and little to push back on web site developers to avoid this pathology. And when packet drops do occur, there is no discrimination as to which packets are most “expensive” to drop.

But these packet bursts fill FIFO buffers in either your broadband equipment or in your home router, blocking any other application (or even the application itself) from timely transmission to your screen or audio equipment. Most of the benefits of packet switching have, in fact, been lost; statistical multiplexing is no longer occurring on a fine time scale.

A single 1500 byte packet represents around 13ms @ 1Mbps; it doesn’t take many packets to have a profound latency effect on anything sharing many bottleneck links. We must be able to isolate one flow from another, so that a new flow does not suffer intermittent catastrophic latency spikes. We must be smart about scheduling each packet: FIFO’s cannot suffice. We must schedule packets on a much more “smart” basis, usually TCP flows, but sometimes other factors (beyond the scope of this posting).

If FIFO’s are hopeless even with QOS on multiple queues, what then?

Signalling TCP

As I explained before, we cannot predict “correct” amounts of buffering; static buffer allocation is hopeless; buffers must be managed.

“Traditional” AQM algorithms only attack part of the problem: that of TCP filling buffers in steady state, but do not address the head of line blocking problems caused by today’s application and operating system behavior (including the web), as the Internet has effectively been gamed.

If you do have an elephant flow (such as a upload or download of a big file) over a bloated bottleneck, without some congestion signalling mechanism, TCP will not back off in a timely fashion and share that link; what is worse, the sharing behavior of TCP is quadratic in the RTT, rather than linear. So policing TCP is necessary, since TCP cannot share bottleneck links in a timely fashion, nor can TCP based applications respond quickly in the face of such excessive buffering (a common example may be a “streaming” video player application, which will have difficulty figuring out the bandwidth it can use). Buffering can also be so large that TCP itself can malfunction, thinking that the path may have changed entirely, as seen in my first traces. An algorithm that signals TCP in a timely fashion via packet drop or ECN is necessary, even if not sufficient. Drop tail FIFO queues are a fundamentally bad idea.

Existing algorithms such as (W)RED for policing TCP are inadequate as that class of algorithm requires careful tuning, which, in practice, has caused these algorithms to often be unused, but since that class of algorithm cannot be tuned in the face of the highly (and almost instantaneous) variable throughput found at the edge of today’s Internet it is hopeless to continue with them. Only a trustworthy, rapidly adaptive mark/drop algorithm requiring no tuning can suffice; and as you’ll see in the next section, we need a mark/drop algorithm that plays well with flow queuing, which (W)RED does not. CoDel (developed by Kathleen Nichols and Van Jacobson) is the first of a new class of mark/drop algorithms; more will be invented with time, no doubt.

Flow Queuing

Since applications (such as the web) can and have gamed the Internet to defeat congestion avoidance, we have to be able to identify and isolate such abusive flows, and ensure that packets that are most latency sensitive are scheduled first. There are a large number of algorithms that were developed in the 1980’s and 1990’s which identify flows, often called “fair” queuing algorithms. Those algorithms were widely explored in research, but in that era, the computational cost of implementing them (e.g. DRR, itself based on SFQ) were considered prohibitive. Our computer systems have radically changed since then, and many of these algorithms are now be blazing fast on today’s systems, with the computational overhead hidden (and therefore “free”) behind memory accesses.

Fairness is in the eye of the beholder, and being TCP fair is not what is called for here either. But we have to start by identifying network flows and scheduling for transmission high priority packets in flows first. As noted above, the first packet(s) in a flow (or packets in a flow that is not building a queue) are the most important to minimize latency. And today’s TCP’s do not like disordered packets, so we need to keep the packets ordered among a given TCP flow.

Putting the Pieces Together

Combining flow scheduling along with signaling TCP is therefore not only “nice to have”, but essential. This is why we are so excited about the fq_codel algorithm developed by Eric Dumazet, inspired by previous work by Dave Täht, which combines flow queuing with CoDel (developed by Kathleen Nichols and Van Jacobson), and scales easily to any bandwidth we see in the edge of the Internet even in software only implementations, to tens of gigabits/second. We believe it is the first of a new class of algorithms, but we also hope, far from the last.

A simple description of fq_codel’s behavior is:

Flows are identified. The first packet(s) from new flows are scheduled before packets from flows that have built a queue. If a flow’s queue empties, it is eligible to again be considered a new flow. CoDel is applied to control the queue length in any flow. Result: timely delivery, and mixing of flows.

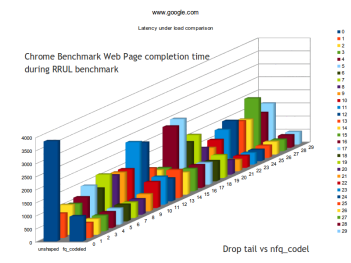

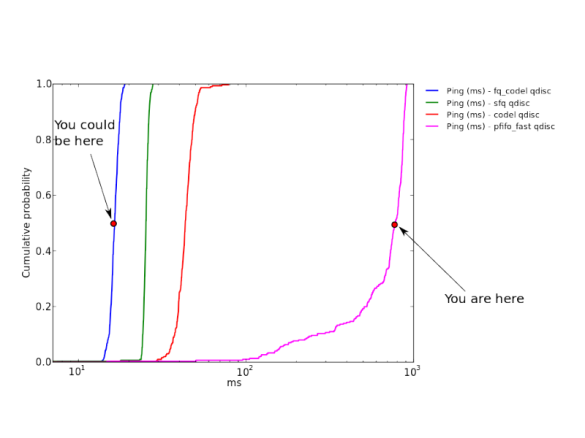

Essentially, fq_codel combines DRR and CoDel with the twist that packets from flows that never build queues are scheduled preferentially to those which do build a queue. Your DNS lookups, DHCP, RA, TCP opens, SSL hand-shakes, first packet of an image in a web page, and VOIP and other small intermittent real time traffic are preferred to bulk data transfers, without requiring any explicit classification. Other variations on this theme are certainly possible: but both controlling TCP with a responsive auto-adjusting drop/mark policy and flow queuing are necessary in today’s Internet, and result in radically better latency under all tested loads, and hugely improves web browsing under load (using the RRUL test), as shown here:

“Fq_codel provides great isolation… If you’ve got low-rate videoconferencing and low rate web traffic they never get dropped. A lot of issues with IW10 go away, because all the other traffic sees is the front of the queue. You don’t know how big its window is, but you don’t care because you are not affected by it. ”

“Fq_codel increases utilization across your entire networking fabric, especially for bidirectional traffic…”

“If we’re sticking code into boxes to deploy CoDel, don’t do that. Deploy fq_codel. It’s just an across the board win.”

After these and other results were presented in the IETF ICCRG meeting, Matt Mathis noted at the microphone that the results are so dramatically better than anything we’ve previously had that it was time to deploy this technology. While further improvements in mark/drop and flow queuing algorithms are possible and even desirable, they will be necessarily small relative to the gains shown here, and we have a serious problem that needs to be fixed immediately. Deployment should start immediately; polishing the apple further should not be allowed to delay deployment, and stupid, unmanaged FIFO queues must die!

July 13, 2013 at 11:47 am |

Are the guys working on the -RT kernel staying abreast of these changes, and making sure they play nicely with the -RT kernel? Seems that there are some applications (I’m thinking of some of the new IP based professional audio transports specifically) that may benefit from this. I think for the most part those multimedia protocols in professional applications are using vlans and managed networks to forcibly set up priorities on a fairly limited network environment, but I imagine as people try to scale those to larger installations (think multi-room production studios or stadium audio management systems) they will start hitting bufferbloat problems within the switches. Before getting there it would be nice to make sure that nothing in the implementation being worked causes problems for the RT kernels.

July 27, 2013 at 3:27 pm |

Switches have become pretty horribly overbuffered in general. I USED to pay attention to network audio a lot, but gave up in the post wireless-n era. Vlan-users have a terrible tendency to presume they own their portion of the wire, when it still is one wire. I really don’t have a good answer for that besides what we’ve come up with so far.

As for your rt-kernel question, I don’t know, most of the rt-kernel features I cared about made it into the mainline. The only one that hasn’t is interrupt threading, which would be good, but there are structural problems with any general purpose OS in tuning it for any particular application.

We DO have a problem on hosts in that most developers are (rightly) optimizing for cpu usage as their target, and are ignoring (somewhat wrongly), the effects on network behavior. Their goal is to get as much “work” done inside a 10ms window – where that work is a variety of tasks, when networks are operating on a time scale much lower than that.

The “smart” hardware that has sprouted, moving offloads, and other significant hunks of the network stack into hardware is rather brittle – and sadly, most low-end cpus cannot drive a gigE link to full throttle without them. So we’re in a bind.

December 31, 2016 at 4:39 pm |

[…] excellent account of this is given by Jim Getty’s from 2013 “Traditional AQM is not enough” (i.e., AQM: Active Queue Management). Data (see Jim Getty’s blog) strongly indicates that at a […]