- George Herbert, Outlandish Proverbs, 1640

In my last post I outlined the general bufferbloat problem. This post attempts to explain what is going on, and how I started on this investigation, which resulted in (re)discovering that the Internet’s broadband connections are fundamentally broken (others have been there before me). It is very likely that your broadband connection is badly broken as well, as is your home router, and even your home computer. There are things you can do immediately to partly mitigate the brokenness, which will make applications such as VOIP, Skype and gaming work much, much better; I’ll cover these in more depth very soon. Also coming soon: how this affects the worldwide dialog around “network neutrality.”

Bufferbloat is present in all of the broadband technologies, cable, DSL and FIOS alike. And bufferbloat is present in other parts in the Internet as well.

As may be clear from old posts here, I’ve had lots of network trouble at my home, made particularly hard to diagnose due to repetitive lightning problems. This has caused me to buy new (and newer) equipment over the last five years (and experience the fact that bufferbloat has been getting worse in all its glory). It also means that I can’t definitively answer all questions about my previous problems, as almost all of that equipment is scrap.

Debugging my network

As covered in my first puzzle piece, last April I was investigating the performance of an old VPN device Bell Labs had built, and found that the latency and jitter when running at full speed made it completely unusable, for reasons I did not understand, but had to understand for my project to succeed. The plot thickened when I discovered I had the same terrible behavior without using the Blue Box.

I had had an overnight trip to the ICU in February; so did not immediately investigate then as I was catching up on other work. But I knew I had to dig into it, if only to make good teleconferencing viable for me personally. In early June, lightning struck again (yes, it really does strike in the same place many times). Maybe someone was trying to get my attention on this problem. Who knows? I did not get back to chasing my network problem until sometime in late June, after partially recovering my home network, further protecting my house, fighting with Comcast to get my cable entrance relocated (the mom-and-pop cable company Comcast had bought had installed it far away from the power and phone entrance), and replacing my washer, pool pump, network gear, and irrigation system.

But the clear signature of the criminal I had seen in April had faded. Despite several weeks of periodic attempts, including using the wonderful tool smokeping to monitor my home network, and installing it in Bell Labs, I couldn’t nail down what I had seen again. I could get whiffs of smoke of the unknown criminal, but not the same obvious problems I had seen in April. This was puzzling indeed; the biggest single change in my home network had been replacing the old blown cable modem provided by Comcast with a new faster DOCSIS 3 Motorola SB6120 I bought myself.

In late June, my best hypothesis was that there might be something funny going on with Comcast’s PowerBoost® feature. I wondered how that worked, did some Googling, and happened across the very nice internet draft that describes how Comcast runs and provisions its network. When going through the draft, I happened to notice that one of the authors lives in an adjacent town, and emailed him, suggesting lunch and a wide ranging discussion around QOS, Diffserv, and the funny problems I was seeing. He’s a very senior technologist in Comcast. We got together in mid-July for a very wide ranging lunch lasting three hours.

Lunch with Comcast

Before we go any further…

Given all the Comcast bashing currently going on, I want to make sure my readers understand that through all of this Comcast has been extremely helpful and professional, and that the problems I uncovered, as you will see before the end of this blog entry, are not limited to Comcast’s network: bufferbloat is present in all of the broadband technologies, cable, FIOS and DSL alike.

The Comcast technical people are as happy as the rest of us that they now have proof of bufferbloat and can work on fixing it, and I’m sure Comcast’s business people are happy that they are in the same boat as the other broadband technologies (much as we all wish the mistake was only in one technology or network, it’s unfortunately very commonplace, and possibly universal). And as I’ve seen the problem in all three common operating systems, in all current broadband technologies, and many other places, there is a lot of glasse around us. Care with stones is therefore strongly advised.

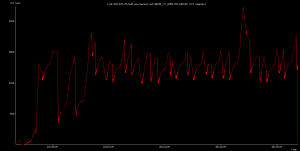

The morning we had lunch, I happened to start transferring the old X Consortium archives from my house to an X.org system at MIT (only 9ms away from my house; most of the delay is in the cable modem/CMTS pair); these archives are 20GB or so in size. All of a sudden, the whiffs of smoke I had been smelling became overpowering to the point of choking and death. “The Internet is Slow Today, Daddy” echoed through my mind; but this was self-inflicted pain. But as I only had an hour before lunch, the discussion was a bit less definite than it would have been even a day later. Here is the “smoking gun” of the following day, courtesy of the DSL Reports Smokeping installation. You too can easily use this wonderful tool to monitor the behavior of your home network from the outside.

As you can see, I had well over one second latency, and jitter just as bad, along with high ICMP packet loss. Behavior from inside out looked essentially identical. The times when my network connection returned to normal were when I would get sick of how painful it was to browse the web and suspend the rsync to MIT. As to why the smoke broke out, the upstream transfer is always limited by the local broadband connection: the server is at MIT’s colo center on a gigabit network that directly peers with Comcast. It is a gigabit (at least) from Comcast’s CMTS all the way to that server (and from my observations, Comcast runs a really clean network in the Boston area). It’s the last mile that is the killer.

As part of lunch, I was handed a bunch of puzzle pieces that I assembled over the following couple of months. These included:

- That what I was seeing was more likely excessive buffering in the cable system, in particular, in cable modems. Comcast has been trying to get definitive proof of this problem since Dave Clark at MIT had brought this problem to their attention several years ago.

- A suggestion of how to rule in/out the possibility of problems from Comcast’s Powerboost by falling back to the older DOCSIS 2 modem.

- A pointer to ICSI’s Netalyzr.

- The interesting information that some/many ISP’s do not run any queue management (e.g. RED).

I went home, and started investigating seriously. It was clearly time to do packet traces to understand the problem. I set up to take data, and eliminated my home network entirely by plugging my laptop directly into the cable modem.

But it had been more than a decade since I had last taken packet captures and stared at TCP traces. Wireshark was immediately a big step up (I’d occasionally played with it over the last decade); as soon as I took my first capture I immediately knew something was gravely wrong despite being very rusty at staring at traces. In particular, there were periodic bursts of illness, with bursts of dup’ed acks, retransmissions, and reordering. I’d never seen TCP behave in such a bursty way (for long transfers). So I really wanted to see visually what was going on in more detail. After wasting my time investigating more modern tools, I settled on the old standbys of tcptrace and xplot I had used long before. There are certainly more modern tools; but most are closed source and require Microsoft Windows. Acquiring the tools, and their learning curve (and the fact I normally run Linux) militated against their use.

A number of plots show the results. The RTT becomes very large a while (10-20 seconds) into the connection, just as the ICMP ping results show. The outstanding data graph and throughput graph show the bursty behavior that was so obvious even when browsing the wireshark results. Contrast this with the sample RTT, outstanding data graph and throughput graphs from the TCP trace manual.

Also remember that buffering in one direction still causes problems in the other direction; TCP’s ack packets will be delayed. So my occasional uploads (in concert with the buffering) were causing the “Daddy, the Internet is slow today” phenomenon; the opposite situation is of course also possible.

The Plot Thickens Further

Shortly after verifying my results on cable, I went to New Jersey (I work for Bell Labs from home, reporting to Murray Hill), where I stay with my in-laws in Summit. I did a further set of experiments. When I did, I was monumentally confused (for a day), as I could not reproduce the strong latency/jitter signature (approaching 1 second of latency and jitter) that I saw my first day there when I went to take the traces. With a bit of relief, I realized that the difference was that I had initially been running wireless, and then had plugged into the router’s ethernet switch (which has about 100ms of buffering) to take my traces. The only explanation that made sense to me was that the wireless hop had additional buffering (almost a second’s worth) above and beyond that present in the FIOS connection itself. This sparked my later investigation of routers (along with occasionally seeing terrible latency in other routers), which in turn (when the results were not as I had naively expected) sparked my investigation of base operating systems.

The wireless traces are much rattier in Summit: there are occasional packet drops severe enough to cause TCP to do full restarts (rather than just fast retransmits), and I did not have the admin password on the router to shut out access by others in the family. But the general shape in both is similar to what I initially saw at home.

Ironically, I have realized that you don’t see the full glory of TCP RTT confusion caused by buffering if you have a bad connection, as it resets TCP’s timers and RTT estimation; packet loss is always considered possible congestion. This is a situation where the “cleaner” the network is, the more trouble you’ll get from bufferbloat. The cleaner the network, the worse it will behave. And I’d done so much work to make my cable as clean as possible…

At this point, I realized what I had stumbled into was serious and possibly widespread; but how widespread?

Calling the consulting detectives

At this point, I worried that we (all of us) are in trouble, and asked a number of others to help me understand my results, ensure their correctness, and get some guidance on how to proceed. These included Dave Clark, Vint Cerf, Vern Paxson, Van Jacobson, Dave Reed, Dick Sites and others. They helped with the diagnosis from the traces I had taken, and confirmed the cause. Additionally, Van noted that there is timestamp data present in the packet traces I took (since both ends were running Linux) that can be used to locate where in the path the buffering is occurring (though my pings are also very easy to use, they may not be necessary for real TCP wizards, which I am not, and they raise a question of accuracy if the nodes being probed are loaded).

Dave Reed was shouted down and ignored over a year ago when he reported bufferbloat in 3G networks (I’ll describe this problem in a later blog post; it is an aggregate behavior caused by bufferbloat). With examples in broadband and suspicions of problems in home routers I now had reason to believe I was seeing a general mistake that (nearly) everyone is making repeatedly. I was concerned to build a strong case that the problem was large and widespread so that everyone would start to systematically search for bufferbloat. I have spent some of the intervening several months documenting and discovering additional instances of bufferbloat, such as in my switch, my home router, results from browser experiments, and additional cases such as corporate and other networks, as future blog entries will make clear.

ICSI Netalyzr

One of the puzzle pieces handed me by Comcast was a pointer to Netalyzr.

ICSI has built the wonderful Netalyzr tool, which you can use to help diagnose many problems in your ISP’s network. I recommend it very highly. Other really useful network diagnosis tools can be found at M-Lab and you should investigate both; some of the tests can be run immediately from a browser (e.g. netalyzr), but some tests are very difficult to implement in Java. And by using these tools, you will also be helping researchers investigate problems in the Internet, and you may be able to discover and expose mis-behavior of many ISP’s. I have, for example, discovered that the network service provided on the Acela Express is running a DNS server which is vulnerable to man-in-the-middle attacks due to lack of port randomization, and therefore will never consider doing anything on it that requires serious security.

At about the same time as I was beginning to chase my network problem, the first Netalyzr results were published at NANOG; more recent results have since been published: Netalyzr: Illuminating The Edge Network, by Christian Kreibich, Nicholas Weaver, Boris Nechaev, and Vern Paxson. This paper has a wealth of data in it on all sorts of problems that Netalyzr has uncovered; excessive buffering is covered in section 5.2. The scatterplot there and the discussion are worth reading. The ICSI group have kindly sent me a color version of that scatterplot that makes the technology situation much clearer (along with the magnitude of the buffering), which they have used in their presentations but is not in that paper. Without this data, I would have still been wondering whether bufferbloat was widespread, and whether it was present in different technologies or not. My thanks to them for permission to post these scatter plots.

As outlined in the Netalyzr paper in section 5.2, the structure you see is very useful to see what buffer sizes and provisioned bandwidths are common. The diagonal lines indicate the latency (in seconds!) caused by the buffering. Both wired and wireless Netalyzr data are mixed in the above plots. The structure shows common buffer sizes, which are sometimes as large as a megabyte. Note that there are times that Netalyzr may have been under-detecting and/or underreporting the buffering, particularly on faster links; the Netalyzr group have been improving its buffer test.
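
To get a feel for what those diagonal lines mean, here is a minimal sketch of the arithmetic: the time a full buffer takes to drain is just its size divided by the link rate. The function name and the buffer sizes and uplink rates below are my own illustrative assumptions, not values taken from the Netalyzr data.

```python
def buffer_delay_seconds(buffer_bytes: float, link_bits_per_second: float) -> float:
    """Time to drain a full buffer through a link: bits queued / bits per second."""
    if link_bits_per_second <= 0:
        raise ValueError("link rate must be positive")
    return buffer_bytes * 8 / link_bits_per_second


# Illustrative (assumed) buffer sizes and uplink rates, not measurements.
for buffer_kib in (64, 256, 1024):
    for uplink_mbps in (1, 2, 10):
        delay = buffer_delay_seconds(buffer_kib * 1024, uplink_mbps * 1_000_000)
        print(f"{buffer_kib:5d} KiB buffer at {uplink_mbps:2d} Mbit/s: {delay:6.2f} s to drain")
```

The same buffer that is harmless on a fast link becomes seconds of delay on a slow uplink, which is why a fixed buffer size cannot be right for every provisioned bandwidth.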

I do have one additional caution, however: do not regard the bufferbloat problem as limited to interference caused by uploads. Certainly more bandwidth makes the problem smaller (for the same size buffers); the wired performance of my FIOS data is much better than what I observe for Comcast cable when plugged directly into the home router’s switch. But since the problem is present in the wireless routers often provided by those network operators, the typical latency/jitter results for the user may in fact be similar, even though the bottleneck may be in the home router’s wireless routing rather than the broadband connection. Anytime the downlink bandwidth exceeds the “goodput” of the wireless link that most users are now connected by, the user will suffer from bufferbloat in the downstream direction in the home router (typically provided by Verizon) as well as upstream (in the broadband gear) on cable and DSL. I see downstream bufferbloat commonly on my Comcast service too: now that I’ve upgraded to 50/10 service, it is much more common that my wireless bandwidth is less than the broadband bandwidth.

Discarding various alternate hypotheses

You may remember that I started this investigation with a hypothesis that Comcast’s Powerboost might be at fault. This hypothesis was discarded by dropping my cable service back to using DOCSIS 2 (which would have changed the signature in a different way when I did).

Secondly, those who have waded through this blog will have noted that I have had many reasons not to trust the cable to my house, due to mis-reinstallation of a failed cable by Comcast earlier, when I moved in. However, the lightning events I have had meant that the cable to my house was relocated this summer, and a Comcast technician had been to my house and verified the signal strength, noise and quality at my house. Furthermore, Comcast verified my cable at the CMTS end; there Comcast saw a small amount of noise (also evident in (some of) the packet traces by occasional packet loss) due to the TV cable also being plugged in (the previous owner of my house loved TV, and the TV cabling wanders all over the house). For later datasets, I eliminated this source of noise, and the cable tested clean at the Comcast end and the loss is gone in subsequent traces. This cable is therefore as good as it gets outside a lab and very low loss. You can consider some of these traces close to lab quality. Comcast has since confirmed my results in their lab.

Another objection I’ve heard is that ICMP ping is not “reliable”. This may be true if pinging a particular node when loaded, as it may be handled on a node’s slow path. However, it’s clear the major packet loss is actual packet loss (as is clear from the TCP traces). I personally think much of the “lore” that I’ve heard about ICMP is incorrect and/or a symptom of the bufferbloat problem. I’ve also worked with the author of httping, so that there is a commonly available tool (Linux and Android) for doing RTT measurements that is indistinguishable from HTTP traffic (because it is HTTP traffic!), by adding support for persistent connections. In all the tests I’ve made, the results for ICMP ping match those of httping. But TCP shows the same RTT problems that ICMP or httping do in any case.

What’s happening here?

I’m not a TCP expert; if you are a TCP expert, and if I’ve misstated or missed something, do let me know. Go grab your own data (it’s easy; just an scp to a well provisioned server, while running ping), or you can look at my data.
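
If you want to script the "ping while uploading" experiment, here is a minimal sketch in Python. It assumes a Linux or macOS style `ping` that accepts `-c` and prints `time=… ms` per reply; the function names are my own, and the host you ping is whatever well provisioned server you have access to.

```python
import re
import statistics
import subprocess

# Matches the per-reply "time=12.3 ms" (or "time<1 ms") field of ping output.
_RTT = re.compile(r"time[=<]([\d.]+)\s*ms")


def parse_ping_rtts(ping_output: str) -> list[float]:
    """Extract per-packet round-trip times (in ms) from ping's text output."""
    return [float(m.group(1)) for m in _RTT.finditer(ping_output)]


def summarize(rtts: list[float]) -> dict[str, float]:
    """Minimum, median and maximum RTT of a run."""
    return {"min": min(rtts), "median": statistics.median(rtts), "max": max(rtts)}


def ping(host: str, count: int = 20) -> list[float]:
    """Run the system ping (assumed '-c COUNT' syntax) and return RTTs in ms."""
    result = subprocess.run(
        ["ping", "-c", str(count), host],
        capture_output=True, text=True, check=False,
    )
    return parse_ping_rtts(result.stdout)
```

Call `summarize(ping(host))` once with the link idle, then again while an scp to the same server is running in another terminal, and compare the two summaries. Lost replies simply do not appear in the list, so a shorter list than `count` is itself a sign of loss.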

The buffers are confusing TCP’s RTT estimator; the delay caused by the buffers is many times the actual RTT on the path. Remember, TCP is a servo system, which is constantly trying to “fill” the pipe. So by not signalling congestion in a timely fashion, there is *no possible way* that TCP’s algorithms can possibly determine the correct bandwidth it can send data at (it needs to compute the delay/bandwidth product, and the delay becomes hideously large). TCP increasingly sends data a bit faster (the usual slow start rules apply), reestimates the RTT from that, and sends data faster. Of course, this means that even in slow start, TCP ends up trying to run too fast. Therefore the buffers fill (and the latency rises). Note the actual RTT on the path of this trace is 10 milliseconds; TCP’s RTT estimator is misled by more than a factor of 100. It takes 10-20 seconds for TCP to get completely confused by the buffering in my modem; but there is no way back.
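
As a rough illustration of how an estimator gets dragged along by queueing delay, here is a sketch of the standard smoothed RTT update from RFC 6298 (alpha = 1/8, beta = 1/4). It is simplified: it leaves out the clock granularity term and the one second minimum RTO, and real TCP stacks differ in detail. The sample series is invented to mimic a 10 ms path whose buffer gradually fills; it is not taken from my traces.

```python
def smoothed_rtt(samples_ms, alpha=1 / 8, beta=1 / 4):
    """Yield (srtt, rttvar, rto) in ms after each RTT sample.

    Simplified RFC 6298 update: no clock granularity term, no minimum RTO.
    """
    srtt = rttvar = None
    for r in samples_ms:
        if srtt is None:
            # First measurement initialises both estimates.
            srtt, rttvar = r, r / 2
        else:
            # RTTVAR is updated using the old SRTT, then SRTT is updated.
            rttvar = (1 - beta) * rttvar + beta * abs(srtt - r)
            srtt = (1 - alpha) * srtt + alpha * r
        yield srtt, rttvar, srtt + 4 * rttvar


# Invented samples: a 10 ms path, then queueing delay growing toward 1.2 s.
samples = [10.0] * 5 + [min(10.0 + 60.0 * i, 1200.0) for i in range(1, 40)]
for n, (srtt, rttvar, rto) in enumerate(smoothed_rtt(samples), start=1):
    if n % 5 == 0:
        print(f"sample {n:2d}: srtt={srtt:7.1f} ms  rto={rto:7.1f} ms")
```

The estimator is doing exactly what it was designed to do; it has no way of telling that almost all of the delay it is averaging is time spent sitting in a buffer rather than time on the wire.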

Remember, timely packet loss to signal congestion is absolutely normal; without it, TCP cannot possibly figure out the correct bandwidth.

Eventually, packet loss occurs; TCP tries to back off, so a little bit of buffer reappears, but it then exceeds the bottleneck bandwidth again very soon. Wash, Rinse, Repeat… High latency with high jitter, with the periodic behavior you see. This is a recipe for terrible interactive application performance. And it’s probable that the device is doing tail drop; head drop would be better.
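
The way a big buffer postpones the congestion signal can be seen in a toy model. The sketch below is a deliberately crude assumption of mine: a sender that offers a constant number of packets per tick (no TCP backoff at all), a bottleneck that drains a fixed number per tick, and a tail-drop FIFO in between. It only shows how buffer size sets both the standing delay and how long it takes before the first drop.

```python
def simulate_queue(arrivals_per_tick, service_per_tick, buffer_packets, ticks):
    """Tail-drop FIFO in front of a bottleneck link (toy model).

    Returns (delays, dropped, first_drop_tick); delays[i] is the queueing
    delay, in ticks, that a packet arriving at the end of tick i+1 would see.
    """
    queue = 0
    dropped = 0
    first_drop_tick = None
    delays = []
    for tick in range(1, ticks + 1):
        queue += arrivals_per_tick
        if queue > buffer_packets:
            # Tail drop: whatever does not fit in the buffer is discarded.
            dropped += queue - buffer_packets
            queue = buffer_packets
            if first_drop_tick is None:
                first_drop_tick = tick
        queue = max(0, queue - service_per_tick)
        delays.append(queue / service_per_tick)
    return delays, dropped, first_drop_tick


# Sender offers 12 packets/tick to a link that carries 10 packets/tick.
for size in (50, 1000):
    delays, dropped, first = simulate_queue(12, 10, size, 1000)
    print(f"buffer={size:4d} pkts: max delay={max(delays):5.1f} ticks, "
          f"first drop at tick {first}, dropped={dropped}")
```

With the same overload, the larger buffer loses the same fraction of packets once it is full, but it takes far longer to produce the first drop and holds a much longer standing queue while doing so.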

There is significant packet loss as a result of “lying” to TCP. In the traces I’ve examined using The TCP STatistic and Analysis Tool (tstat) I see 1-3% packet loss. This is a much higher packet loss rate than a “normal” TCP should be generating. So in the misguided idea that dropping data is “bad”, we’ve now managed to build a network that is both lossier and exhibits more than 100 times the latency it should. Even more fun is that the losses are in “bursts.” I hypothesize that this accounts for the occasional DNS lookup failures I see on loaded connections.

By inserting such egregiously large buffers into the network, we have destroyed TCP’s congestion avoidance algorithms. TCP is used as a “touchstone” of congestion avoiding protocols: in general, there is very strong pushback against any protocol which is less conservative than TCP. This is really serious, as future blog entries will amplify. I personally have scars on my back (on my career, anyway), partially induced by the NSFnet congestion collapse of the 1980’s. And there is nothing unique here to TCP; any other congestion avoiding protocol will certainly suffer.

Again, by inserting big buffers into the network, we have violated the design presumption of all Internet congestion avoiding protocols: that the network will drop packets in a timely fashion.

Any time you have a large data transfer to or from a well provisioned server, you will have trouble. This includes file copies, backup programs, video downloads, and video uploads. Or a generally congested link (such as at a hotel) will suffer. Or if you have multiple streaming video sessions going over the same link, in excess of the available bandwidth. Or running current bittorrent to download your ISO’s for Linux. Or google chrome uploading a crash to Google’s server (as I found out one evening). I’m sure you can think of many others. Of course, to make this “interesting”, as in the Chinese curse, the problem will therefore come and go mysteriously, as you happen to change your activity (or things you aren’t even aware of happen in the background).

If you’ve wondered why most VOIP and Skype have been flakey, stop wondering. Even though they are UDP based applications, it’s almost impossible to make them work reliably over such links with such high latency and jitter. And since there is no traffic classification going on in broadband gear (or other generic Internet service), you just can’t win. At best, you can (greatly) improve the situation at the home router, as we’ll see in a future installment. Also note that broadband carriers may very well have provisioned their telephone service independently of their data service, so don’t jump to the conclusion that therefore their telephone service won’t be reliable.

Why hasn’t bufferbloat been diagnosed sooner?

Well, it has been (mis)diagnosed multiple times before; but the full breadth of the problem I believe has been missed.

The individual cases have often been noticed, as Dave Clark did on his personal DSLAM, or as noted in the Linux Advanced Routing & Traffic Control HOWTO. (Bert Hubert attributed much more blame to the ISP’s than is justified: the blame should primarily be borne by the equipment manufacturers, and Bert et al. should have made a fuss in the IETF over what they were seeing.)

As to specific reasons why, these include (but are not limited to):

- We’re all frogs in heating water; the water has been getting hotter gradually as the buffers grow in subsequent generations of hardware, and memory has become cheaper. We’ve been forgetting what the Internet *should* feel like for interactive applications. Those of us who are old guys are forgetting how well the Internet worked in the days when links ran at 64Kb, fractional T1 or T1 speeds. For interactive applications, it often worked much better than today’s internet.

- Those of us most capable of diagnosing the problems have tended to opt for the higher/highest bandwidth tier service of ISP’s; this means we suffer less than the “common man” does. More about this later. Anytime we try to diagnose the problem, it is most likely that we were the cause; as soon as we stop what we were doing to cause “Daddy, the Internet is slow today”, the problem vanishes.

- It takes time for the buffers to confuse TCP’s RTT computation. You won’t see problems on a very short (several second) test using TCP (you can test for excessive buffers much more quickly using UDP, as Netalyzr does).

- The most commonly used system on the Internet today remains Windows XP, which does not implement window scaling and will never have more than 64KB in flight at once. But bufferbloat will become much more obvious and common as more users switch to other operating systems and/or later versions of Windows, any of which can saturate a broadband link with merely a single TCP connection.

- In good engineering fashion, we usually do a single test at a time, first testing bandwidth, and then latency separately. You only see the problem if you test bandwidth and latency simultaneously. None of the common consumer bandwidth tests test latency simultaneously. I know that’s what I did for literally years, as I would try to diagnose my personal network. Unfortunately, the emphasis has been on speed; for example, the Ookla speedtest.net and pingtest.net are really useful; but they don’t run a latency test simultaneously with each other. As soon as you test for latency with bandwidth, the problem jumps out at you. Now that you know what is happening, if you have access to a well provisioned server on the network, you can run tests yourself that make bufferbloat jump out at you.
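
To put numbers on the window scaling point in the list above: a single TCP connection can never have more than one window of data in flight per round trip, so its throughput is capped at window / RTT. The sketch below works that out for an unscaled 65535 byte window and for scaled windows (the window scale option allows a shift of up to 14). The RTT values are illustrative assumptions of mine, not measurements.

```python
MAX_UNSCALED_WINDOW = 65535  # bytes; the 16-bit TCP window field
MAX_WINDOW_SHIFT = 14        # largest shift the window scale option allows


def max_throughput_bps(window_bytes: float, rtt_seconds: float) -> float:
    """Upper bound on one connection's throughput: one window per round trip."""
    if rtt_seconds <= 0:
        raise ValueError("RTT must be positive")
    return window_bytes * 8 / rtt_seconds


def scaled_window(shift: int) -> int:
    """Largest advertisable window, in bytes, for a given window scale shift."""
    if not 0 <= shift <= MAX_WINDOW_SHIFT:
        raise ValueError("shift must be between 0 and 14")
    return MAX_UNSCALED_WINDOW << shift


# Illustrative RTTs: a nearby server, a cross-country path, a bloated path.
for rtt_ms in (10, 100, 1000):
    for shift in (0, 4):
        bps = max_throughput_bps(scaled_window(shift), rtt_ms / 1000)
        print(f"RTT {rtt_ms:4d} ms, shift {shift}: at most {bps / 1e6:8.2f} Mbit/s")
```

Without scaling, the cap also bounds how much data one connection can park in a bottleneck buffer; with scaling, a single connection is free to fill whatever buffer the hardware provides.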

I understand you may be incredulous as you read this: I know I was when I first ran into bufferbloat. Please run tests for yourself. Suspect problems everywhere, until you have evidence to the contrary. Think hard about where the choke point is in your path; queues form only on either side of that link, and only when the link is saturated.

Coming installments

- How and why I believe bufferbloat helped trigger the current network neutrality debate, due to misdiagnosis of the root cause of bittorrent problems, combined with Windows XP limitations

- Why non-carrier VOIP and Skype are so poor

- 3G networks – why your smart phone’s performance is often horrible

- Bufferbloat in corporate networks

- ISP’s and bufferbloat

- “Fat” subnets (e.g. 802.11 wireless)

- Mitigation strategies you can immediately apply (sometimes)

- Worrying trends (why I lose sleep at night)

- RED and its (current) limitations

- Real solutions, and where research is needed

- Network meltdown, modern variety

Acknowledgements

My thanks to the many who have helped crack this case, including Dave Clark, Vint Cerf, Vern Paxson, Van Jacobson, Dave Reed, Scott Bradner, Steve Bellovin, Greg Chesson, Dick Sites, Ted Ts’o, and quite a few others. And particularly to the ICSI Netalyzr developers, without whose work I’d still be wondering if what I saw at home and in New Jersey were a fluke.

Conclusions

All broadband technologies are suffering badly from bufferbloat, as are many other parts of the Internet.

You suffer from bufferbloat nearly everywhere: if not at home or your office, then when you travel. Many hotels are now connected by broadband connections, and you often suffer grievous latency and jitter since they have not mitigated bufferbloat and are sharing the connection with many others. (More about mitigation strategies soon.) How easy or difficult it is to fix those technologies clearly depends on the details of those technologies; full solutions depend on active queue management, but some other mitigations are possible (just set the buffers to something sane, as they are often up to a megabyte in size now, as the ICSI data show), as I’ll describe later in this sequence of blog posts.

Bufferbloat is a serious, widespread problem, the full severity of which will become clearer in subsequent postings.

Let’s go fix it, together.

December 6, 2010 at 8:08 pm

Is this exclusively about latency, or could it also explain situations where a large file transfer initially saturates the “last hop” link, but slows down to ~10% of theoretical bandwidth after a few megabytes are transferred, and stays that way until completion?

December 6, 2010 at 8:14 pm

I’d have to see data to know (I’m not volunteering to go look at yours either; I have plenty of fish frying). I’ve seen high packet loss rates at times, but I haven’t caught anything like 90% loss rate in my experiments.

Certainly Powerboost (and similar features from other broadband providers), don’t make a 90% difference in bandwidth performance; they might get you temporarily a factor of 2-5 more than your provisioned bandwidth at most.

What is your service, by whom?

And you may need to take some traces to really see what is going on.

December 6, 2010 at 8:58 pm

@Zack — packet drops (as perceived by TCP) will cause bandwidth to strangle, and bufferbloat definitely causes the perception of packet drops.

December 6, 2010 at 9:01 pm

It’s not that simple, with a modern TCP: fast retransmit and SACK can paper over a lot of sins. But there may be circumstances where things go badly wrong. I suggest you take a packet capture, and see if you can get someone with real TCP expertise to take a look at it.

December 7, 2010 at 11:00 am

Unfortunately, this was happening at my previous apartment, in a different city, with a different ISP, and doesn’t seem to be happening now. If I see it again, though, I’ll get a packet trace.

December 6, 2010 at 9:26 pm

“The most commonly used system on the Internet today remains Windows XP, which does not implement window scaling and will never have more than 64KB in flight at once.”

For what it’s worth, Windows XP supports TCP window scaling (and timestamps), but it’s not enabled by default. More info at: http://support.microsoft.com/kb/224829

December 6, 2010 at 9:54 pm

Yes, you are entirely correct. Of course, editing your registry on Windows is hazardous to your machine’s health, so few enable it. It’s mostly interesting as it bears on why bufferbloat (and problems it has caused) has gone so long before widespread diagnosis, as future posts will make clear. It is also why I tend to lose sleep at night: the traffic is finally shifting away from old TCP’s as XP finally retires, and I worry about the problem becoming more severe.

January 8, 2011 at 1:36 pm

Actually, even with the default setting, Windows XP will use large window scaling if it receives a SYN packet with the window scaling option marked.

January 8, 2011 at 2:43 pm

Most traffic is initiated by Windows XP, given its (finally dropping) dominance on the net. So correct me if I’m wrong, but that tells me that we’ll still see most XP initiated TCP sessions running without window scaling.

December 6, 2010 at 11:10 pm |

Linux has traffic shaping capabilities that can be used to work around this problem. My home setup involves a Linux router sitting in front of the DSL modem. Traffic from the router to the DSL modem is rate limited to a couple of percent slower than the actual DSL link speed, so that buffering will occur in the router rather than in the modem. Then, one can configure buffering behaviors in the router:

– limiting buffer size, to control latency;

– fair queuing (typically SFQ in linux), so that individual high throughput connections might still have high latency but they at least won’t impact the latency of lower throughput connections;

– or, any combination of the above strategies.

Really, the Linux traffic shaping stuff is very powerful and underused. I wish broadband hardware manufacturers could all do something similar in their hardware (even better if they’d make it configurable, of course).
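The arithmetic behind that setup can be sketched as follows. This is only an illustration under stated assumptions: the function name, the 2% margin and the 50 ms latency target are made up for the example, and the resulting numbers would still have to be fed into whatever shaper the router actually uses.

```python
def shaper_settings(link_rate_bps, margin=0.02, target_latency_s=0.05):
    """Return (shaped rate in bit/s, queue limit in bytes).

    margin: fraction to shave off the real link rate so that the queue
            builds in the router rather than in the modem (assumed value).
    target_latency_s: worst-case queueing delay we are willing to accept
            (assumed value).
    """
    if not 0 <= margin < 1:
        raise ValueError("margin must be in [0, 1)")
    shaped_rate = link_rate_bps * (1.0 - margin)
    # A queue of this many bytes drains in target_latency_s at the shaped rate.
    queue_limit_bytes = round(shaped_rate * target_latency_s / 8)
    return shaped_rate, queue_limit_bytes


rate, limit = shaper_settings(1_000_000)  # hypothetical 1 Mbit/s uplink
print(f"shape to {rate / 1000:.0f} kbit/s, queue limit {limit} bytes")
```

Note how small the resulting queue is on a slow uplink: a handful of full-size packets, not hundreds.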

December 6, 2010 at 11:16 pm |

Yes, it does, as I’ll explain in detail in a future post. OpenWRT variants such as gargoyle do this. It doesn’t require hardware at all, just use of existing facilities (though RED also has some problems, as I’ll also cover). This is the mitigation I’ve referred to in my post. But as the posts are long enough as it is, I didn’t want to try to cover that immediately.

Note that classification is not sufficient, you also need to run some form of AQM, or you’ll still have problems.

However, it’s clear they aren’t doing everything they should: such as running (G)RED on the local routing.

January 8, 2011 at 1:47 pm |

Interestingly, my friend Shane Tzen observed this problem about a decade ago and wrote specifically about using traffic shaping strategies with TCP to avoid it: http://www.knowplace.org/pages/howtos/traffic_shaping_with_linux/network_protocols_discussion_traffic_shaping_strategies.php (look at the section titled TCP).

December 7, 2010 at 12:18 am |

I’ve also been aware of this problem since May 2009, when I noticed that high latency was correlated with a saturated upload link. Initially I thought it was something BitTorrent specific, what with the 100+ connections, but it was just a wild guess. The key moment for me was when I realized that even a single uploading connection as with a speed test was capable of increasing the latency of the connection. At that point I understood why, because all of the QoS related information out there for systems like OpenWRT and Tomato mention the buffering issue as something that has to be worked around for the QoS to be able to provide good latencies for high priority packets. I’m amazed at how much more time you had to spend to diagnose this, but I’m happy you’re taking up the cause, and I look forward to your advice for mitigating it, given the level of detail you’ve put into this post.

December 7, 2010 at 5:18 am |

The time has been spent mostly looking elsewhere than in the broadband link; that was clear quickly as soon as I had traces and saw the Netalyzr data.

Since it quickly became clear the problem is much more widespread than the broadband edge network, the time has gone into building a strong enough case that I now hope everyone will stop and think deeply about whether their piece of the Internet system suffers from bufferbloat. Dave Reed tried to warn everyone over a year ago about bufferbloat in 3G network systems, and despite his deep expertise in Internet technology (he’s a co-author of the famous “end to end” design paper), ended up not “making the case” well enough to convince the jury. Some have axes to grind.

The immediate reaction I’ve received on quite a few occasions, including in my own company, has been incredulity.

Just to give a small sample of what I’ve heard over the last few months. It helps that I’ve had a bit of success with this quest; I know of at least one product we’ll be shipping which will work well rather than badly, having had a bloatectomy. And that device will therefore likely work much better than its competition; I certainly hope it does well when it reaches the market.

December 7, 2010 at 12:19 am |

I just posted this on LWN. But I was afraid you’d miss it:

This is freaking fantastic.

It’s really really cool beyond belief that you (Gettys) figured it out.

I mean seriously amazing stuff. No question about it.

In terms of technical insight and investigative ability this was a HUGE hit out of the ballpark. Way out. You not only got a home run, you hit it over the stands, past the parking lot and it’s bouncing over the highway as we speak.

Internet history in the making. No question about it at all.

Beyond belief you deserve the gratitude of, well, anybody with high speed internet access to the internet.

Words escape me. All I can do is shake my head in awe. Completely awesome.

Kudos.

December 7, 2010 at 5:05 am |

Thanks; but I think you are too kind.

Many of the puzzle pieces were handed to me (unassembled) by Comcast.

As always, we are on the shoulders of other giants: the area of congestion management was explored with a depth of understanding I admire deeply by the likes of Van Jacobson, Sally Floyd, and many, many others. If I’ve done anything important here, it has been recognizing that the problem is occurring in other parts of the end-to-end system than “conventional” internet core routers, where it was pretty fully explored in the 1980’s and 1990’s.

And chance is very important: aiding me was knowing some of the players here, so that when I smelled smoke, they could diagnose the fire, giving me the confidence to dig deeper and look further. So in part, it’s being in a particular place at a particular time.

December 7, 2010 at 12:58 am |

I haven’t dug too deeply into this, but I wonder if the variants of TCP that use increases in round trip times as an indication of congestion would avoid this problem.

December 7, 2010 at 1:35 am |

I’ve been working in the area of video streaming over TCP for a number of years. In the course of that work, I’ve noticed some of the pathologies in last-mile broadband access too. A lot of the time, they seem to be due to shapers that appear to be applied probabilistically. It seems to me that if you are a long fat TCP flow, odds are high you will be lumped into the “smells like bittorrent” category of the “traffic management” gear of the ISP.

When that happens, the shaper kicks in, and the buffering is horrendous.

For a kind of crazy workaround, you might find a paper we published in the ACM Multimedia Systems 2010 conference to be entertaining:

http://www.mmsys.org/?q=node/24

In particular, we designed an automated failover mechanism into our protocol above TCP, called Paceline. Basically, when TCP delay goes off the chart, we kill the connection and continue on a fresh one. I usually explain this as based on the human behavior that is the ‘stop-reload’ cycle everyone does when their web browsing session stalls. Only in Paceline, we automate it. It was not designed to address the above shaper issue, but I’ve noticed that it often does so in practice. The connections will failover for a few seconds, and then one will seem to break free of the shaper and be good to go for tens of seconds or even minutes. I had a good chuckle when I first noticed it in action. 🙂

— Buck Krasic (University of British Columbia)

December 7, 2010 at 4:54 am |

Yuck. Engineering around brokenness. Let’s get the brokenness fixed… Or the kludge tower that is the Internet will teeter yet more, and someday we’ll fall over (something I now actually fear, as I’ve alluded to in my posting and will discuss in more detail soon).

December 7, 2010 at 10:49 am |

There is one other thing I meant to mention in my earlier post.

You may be aware of this, but it is important to remember that TCP’s window size is the minimum of 1) receive buffer size, 2) send buffer size, 3) bandwidth delay product determined by the congestion control algorithms. When buffer sizes in the network get massive, it is very possible that 1) or 2) will be smaller than 3), so you could say the effect is to “turn off” congestion control in elephant flows most of the time. From their point of view, they are in the LAN-like ACK-paced mode: they simply send data on receipt of every ACK, and leave the actual rate determination to lower network layers. I have long suspected/wondered whether whoever engineered current traffic management practices has done this by design: the goal isn’t to “break” congestion control, but instead to re-assign responsibility to a different entity, from end-host to ISP managed devices (broadband modems and traffic management gear).
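The interaction of the three limits can be sketched in a few lines: the sender can keep in flight no more than the smallest of them, and whichever one is smallest is the one actually in control. The names and byte counts below are illustrative assumptions, not values taken from any trace.

```python
def effective_window(recv_buffer: int, send_buffer: int, cwnd: int) -> int:
    """Bytes a sender may have in flight: the smallest of the three limits."""
    return min(recv_buffer, send_buffer, cwnd)


def limiting_factor(recv_buffer: int, send_buffer: int, cwnd: int) -> str:
    """Name the limit that is currently governing the connection."""
    limits = {
        "receive buffer": recv_buffer,
        "send buffer": send_buffer,
        "congestion window": cwnd,
    }
    return min(limits, key=limits.get)


# Hypothetical flow behind a bloated buffer: no loss is ever signalled, so the
# congestion window keeps growing until a socket buffer becomes the cap.
print(effective_window(65535, 131072, 400000))  # 65535
print(limiting_factor(65535, 131072, 400000))   # receive buffer
```

Once the congestion window is no longer the smallest term, congestion control has in effect stopped steering the flow.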

You have done a lot of good measurements. I’ve found some insights through end host instrumentation; specifically, I’ve wired up some of Linux’s TCP_INFO sockopt statistics (buffer sizes, window size, RTTs, RTOs etc.) to a user level trace tool (of my writing). Watching the actual values used inside of TCP is quite informative.
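For readers who want to try this kind of instrumentation, here is a minimal sketch of reading TCP_INFO from Python on Linux. It is not the trace tool mentioned above. It assumes the leading layout of `struct tcp_info` from `linux/tcp.h` (eight one-byte fields followed by 32-bit counters) and ignores anything later kernels append; the field names simply drop the `tcpi_` prefix, and `parse_tcp_info` and `read_tcp_info` are names invented for this example.

```python
import socket
import struct

# Leading part of Linux's struct tcp_info: 8 one-byte fields, then 21 u32s.
_TCP_INFO_FORMAT = "=8B21I"
_U32_FIELDS = (
    "rto", "ato", "snd_mss", "rcv_mss",
    "unacked", "sacked", "lost", "retrans", "fackets",
    "last_data_sent", "last_ack_sent", "last_data_recv", "last_ack_recv",
    "pmtu", "rcv_ssthresh", "rtt", "rttvar",
    "snd_ssthresh", "snd_cwnd", "advmss", "reordering",
)


def parse_tcp_info(raw: bytes) -> dict:
    """Decode the leading counters of a raw tcp_info buffer into a dict."""
    size = struct.calcsize(_TCP_INFO_FORMAT)
    if len(raw) < size:
        raise ValueError(f"need at least {size} bytes, got {len(raw)}")
    values = struct.unpack_from(_TCP_INFO_FORMAT, raw)
    return dict(zip(_U32_FIELDS, values[8:]))  # skip the 8 one-byte fields


def read_tcp_info(sock: socket.socket) -> dict:
    """Fetch and decode TCP_INFO for a connected TCP socket (Linux only)."""
    raw = sock.getsockopt(socket.IPPROTO_TCP, socket.TCP_INFO, 256)
    return parse_tcp_info(raw)
```

Calling `read_tcp_info(sock)` periodically during a bulk transfer and logging `rtt` (reported in microseconds) and `snd_cwnd` (in segments) should show the round trip time climbing as a bloated buffer fills.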

As for the kludginess of Paceline, yea well “kludge” vs “pragmatic, balanced and elegant solution given the context” is always a subjective assessment. 😉

December 7, 2010 at 1:46 am |

Hi Jim,

Thanks for delving into this! I’ve been wanting to get to the bottom of this ever since writing the Linux Advanced Routing & Traffic Control HOWTO.

I indeed noticed this problem way back when in 1999 or so. You correctly note that the blame should fall on equipment manufacturers, but back then consumers did not have any choice in the matter. You got the equipment your cable company or DSL provider selected for you.

Also, at the time, there was a huge and almost exclusive focus on ‘DOWNLOAD SPEED’, and modems were clearly optimized to generate as much of that as possible, disregarding any latency impact.

About raising a stink in the IETF, I don’t know. At the time I did not see the (European) Internet Service Providers I was working with interact with the IETF much.

But anyhow, let’s hope something happens now. In the meantime, http://lartc.org/wondershaper gives you control of the queues again.

Bert

December 7, 2010 at 4:42 am |

Certainly the ISP’s share the responsibility for the problem with equipment vendors; the monomaniacal focus on bandwidth has cost us all tremendously, and we need to change the conversation from solely bandwidth to some bandwidth/latency metric to make progress. We have to change this to a competitive situation to make quick progress. Without shining the light of day onto the problem and turning it to a competitive situation, bufferbloat won’t get eliminated in finite time.

Van Jacobson pointed out to me that the problem goes back a long way, when DARPA walked away from funding most network research over a decade ago: this left research in how to handle dynamic range of bandwidth completely in the lurch; NSF has primarily been interested in just “go fast” to connect scientists to super computers. So nobody has been minding the store, and doing research on many orders of magnitude of differing performance is far from a fully solved problem and needs serious research. Dynamic range of adaptive behavior is as hard as absolute performance; we’ve only been looking at absolute performance for over a decade.

As to the IETF, even in 1997, when I was still working in HTTP extensively, there was enough representation that the word might have spread; the Nordic countries in particular were already very clueful. The IETF is both very similar to and slightly different from the FOSS community (sharing some of the same heritage, both having been spawned out of the academic research communities decades ago). There was certainly heavy representation from all the equipment manufacturers. Somehow we need to break down the barriers that have somewhat separated the communities, as there is much that can/should be shared.

And yes, mitigation of bufferbloat is (partially) possible via Wondershaper and techniques like that which Paul Bixel is attempting in his recent work in Gargoyle (I haven’t yet tried them out, but hope to soon). Just remember that the problem is more general, and not confined to the router/broadband hop; we also have to fix even local traffic (to your storage and other boxes at home), as my experiments show.

And the base OS’s all have problems to some degree or another. We have a mess everywhere, and be careful with stones…

December 21, 2010 at 5:00 pm |

Hi Bert,

The Wondershaper should be updated to use the TC options “linklayer” and “overhead”.

This solves the issue of “reducing” the bandwidth to achieve queue control, as these options (e.g. linklayer ADSL) take ADSL overhead and framing into account.

The options (which I implemented) are included in mainline Kernels since 2.6.24 and in tc/iproute2 in version 2.6.25.

Guess word of this (now old) option has not spread… sorry about that.

–Jesper Dangaard Brouer

January 7, 2011 at 8:18 pm |

Is there documentation for the new options? Everything I can find seems to be a few years old (HOWTOs, Documentation in the kernel source, man pages, the lartc.org site, etc.).

January 7, 2011 at 9:36 pm

Ugh. Yeah, that’s one of the real headaches.

Much of the “tuning” information I’ve seen is both out of date, often now completely broken, superseded by other problems (e.g. classification may be completely ineffective if your device driver is doing buffering underneath you), and mostly to “go fast” for supercomputers, and not what most users want/need. This is part of why I think real solutions should “just work”; expecting everyone to figure out the right “default” is a recipe for failure.

I need to turn on a wiki I have set up to help with this problem and have a place for everyone to work together on this. Maybe next week. Getting Slashdotted today hasn’t helped.

December 7, 2010 at 4:46 am |

Among the different TCP congestion algorithms available in Linux there is one that estimates buffer sizes in devices along the path. I just cannot remember which one.

December 7, 2010 at 4:51 am |

That may be somewhat useful in some circumstances; however, as you’ll see from a future post, I’m skeptical of the “change TCP” approach.

February 3, 2011 at 12:41 pm |

TCP vegas is the one you are referring to.

It does not compete successfully with other TCP variants. Further, it appears to be confused by the number of retries in a modern wireless connection, which leads it to mis-estimate the length of the path (resulting in a slowdown).

TCP veno has some potential.

December 7, 2010 at 7:42 am |

This blog post explains a lot. Recently I upgraded my cable internet connection from 2 to 10 Mbps and also switched from Windows XP to 7, and I’ve experienced incredible sluggishness and outright connection resets when downloading so much as 1 file that saturates the pipe. The one file I’m downloading with wget comes along really nicely at a steady 1.1 MB/s, but all the other connections that I have open (like ssh and irc) reset within 30 to 60 seconds of starting the download.

My cable modem is a crap ass Motorola Surfboard fwiw.

December 7, 2010 at 9:02 am |

Yes, you’ll see more problems having switched to Windows 7, due to its implementing window scaling by default. Exactly how bad things can get, I don’t really know; I haven’t seen connection resets in my controlled experiments, but have seen DNS lookup failure.

How much pain you will suffer depends on the cross product of buffering amount and bandwidth (in each direction).
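One way to read this: a full buffer adds a delay equal to its size divided by the rate at which the link drains it. The sketch below works that out for a few cases; the buffer size and link rates are illustrative assumptions, not measurements of any particular modem.

```python
def queue_delay_seconds(buffer_bytes: int, link_rate_bps: float) -> float:
    """Time a packet waits behind a full buffer draining at link_rate_bps."""
    if link_rate_bps <= 0:
        raise ValueError("link_rate_bps must be positive")
    return buffer_bytes * 8 / link_rate_bps


BUFFER_BYTES = 256 * 1024  # hypothetical modem buffer size

for label, rate_bps in (("1 Mbit/s uplink", 1e6), ("10 Mbit/s downlink", 10e6)):
    delay = queue_delay_seconds(BUFFER_BYTES, rate_bps)
    print(f"{label}: {delay:.2f} s of added latency when the buffer is full")
```

The same buffer hurts ten times more on the slower direction, which is consistent with saturated uploads usually being the most painful case.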

I have no data on which modems may be “good” or “bad” in terms of buffering.

I do have a SB6120 myself; tomorrow’s post will be how I’ve mitigated most of the pain in my broadband hop. I’m quite happy now…

December 7, 2010 at 7:50 am |

Also, I’ve noticed that manually limiting the download speed results in oddly fluctuating speeds. I’ve seen this with both LeechFTP and wget — I’ve tried limiting the download speed to 800 kB/s to avoid having my other connections reset, and the download speed fluctuates between 100 and 1000 kB/s on my cable modem. On an ethernet connection at work (presumably a fiber optic link without bufferbloat at any point) everything downloads at a steady 800 kB/s when I try this.

December 7, 2010 at 9:06 am |

This may not be a “real” effect you are seeing; the fluctuation may be primarily in the accounting. If you look at my traces, you’ll see bursts of dup’ed acks in them and bursts of SACKS. Most data did not get dropped, but the acks certainly end up getting piled together. TCP gurus can better explain what’s going on; I’m not such a guru.

December 7, 2010 at 7:52 am |

Hi Jim,

When I upload large files over my cable connection, I always see it go in bursts with a period of about a second. I had assumed that this was something inherent in the way the cable system imposed my upstream bandwidth limit, i.e. it was setting a quota of bytes per second. Now I suspect that the data on the cable is going at a constant rate and the 1 second burstiness is a function of the buffer size in my modem.

So the question is, what can be done about it? All of my network gear has some sort of web interface where lots of things can be tweaked, but I’ve never seen anything to change buffer sizes. I wonder if it’s possible in principle to change the buffer sizes in typical devices by changing the software, or whether the buffers are at a lower level in the hardware?

Anyway, I look forward to your future posts.

December 7, 2010 at 9:03 am |

As I’ll discuss in tomorrow’s post, you can avoid the broadband bufferbloat with some home routers. Of course, as I showed in a previous post, the home routers themselves may also have problems.

December 7, 2010 at 7:53 am |

[…] morning I read an interesting article on the subject by Jim Gettys, and the problem seems to be worse than anticipated. You can read more […]

December 7, 2010 at 8:07 am |

I have been reading your posts about buffer bloat with interest.

One of my friends identified this problem in 2004 and fixed it using traffic shaping, just like some of the other commenters. http://www.greenend.org.uk/rjk/2004/tc.html

If you are running Linux, have a look at its pluggable congestion control algorithms. See . Some algorithms rely on RTT measurements rather than packet loss for feedback on congestion. Try changing to TCP-vegas and see if that solves the problem.

December 7, 2010 at 8:08 am |

Sorry, broke the linux link – see http://fasterdata.es.net/TCP-tuning/linux.html

December 7, 2010 at 9:13 am |

Actually, much of what that link describes is *exactly* the kind of bandwidth maximization tuning that got us into this mess, and is often obsolete information to boot. For example, at this date Linux automatically tunes its socket buffer sizes, making a class of “optimization” obsolete (along with some of the reason for some of the buffering).

What is more, as I said in a previous post: *there is no single right answer* for buffer sizes. The challenge is how to do buffer management in a fully automatic way. More about that to come…

December 7, 2010 at 9:08 am |

Yes, that’s tomorrow’s fodder indeed. I can only write and edit so fast.

December 7, 2010 at 8:39 am |

yes indeed here’s a partial solution

http://paravirtualization.blogspot.com/2010/11/terrible-internet-buffer-overrun.html

December 7, 2010 at 9:19 am |

Jon, you are a man after my own heart….

And I certainly hope it doesn’t come to your jocular “The Terrible Internet Buffer Overrun Disaster of 2012”, though I have been losing sleep over it. Destroying TCP’s congestion avoidance algorithms is a recipe for disaster.

December 7, 2010 at 8:48 am |

I’ve been arguing with Verizon in the UK for the last year about insane RTTs on our E1. They always pointed at over-utilisation, but it just didn’t make sense to me that RTT would be knocked to pieces by a single FTP session. As you mention in the article, for a while now the whole network has just ‘felt’ wrong in a way I struggled to explain, but knew wasn’t right. Also as you suggest, I had pretty much given up the ghost on working it out and have just been throwing bandwidth at the problem, with very limited success. But reading this, suddenly it all makes sense. Not sure where to go from here, but at least I know I’m not mad.

Looking at your quoted comment above “but dropping any packet is horrible and wrong” I can’t help but think how many ISP SLA documents have specific compensation clauses about levels of packet loss. Would it be fair to suggest that this builds in an inherent motivation for the ISPs to increase buffer size in order to prevent packet loss and therefore reduce compensation payout? Even if by doing so they break the network? Note, I’m not suggesting this is a Machiavellian plot, but simply an unintended consequence of how ISP contracts are written.

December 7, 2010 at 9:22 am |

I suspect SLA’s should have some packet level loss clause.

What is missing, as in the public broadband tests, is test of latency under full load. We’ve focussed on bandwidth so long we’ve forgotten latency.

And yes, Petunia, if we (re)build the Internet properly, we can have our cake and eat it too. This shouldn’t have to be one or the other, but not both….

December 7, 2010 at 9:38 am |

I just had a look at the SLA for our E1. Interestingly, we get a direct commitment to packet loss based on a percentage per month from ingress to the network (i.e. our managed router) to the point where they hand it off to the next provider or destination, but there doesn’t appear to be any exception for over-utilisation. There is also a latency SLA, but this only applies across the core network, not the last mile. So if my local tail is buffered up to the eyeballs and causing latency, but not dropping packets, neither SLA will kick in. This structure makes sense when the average throughput is lower than the total bandwidth, as was typically the case historically. But in the modern age when any pipe can be filled no matter how fat it is, it doesn’t make sense any more. Before any technical fix can be applied, the ISPs need to alter their SLA structure so packet loss from over-utilisation is exempted from compensation, otherwise any attempt to fix this will just trigger lots of invalid payments to customers.

December 7, 2010 at 10:23 am

Normal TCP loss rates for signaling congestion are much lower than what I observe; I expect there is a point in the middle that will work. And ECN is another option that needs exploration.

Jim

December 11, 2010 at 5:11 pm |

…but jitter is often mentioned in SLAs.

December 7, 2010 at 1:06 pm |

Note that Verizon has made the same mistake as Comcast, as has AT&T, the hardware manufacturers, the operating system folks, and so on. We’re all living in glass houses, so be gentle and leave the stones behind; go forth and educate…

Telling everyone “they are stupid”, or “they screwed up”, when it’s “we were complacent”, and “we all screwed up” won’t be at all helpful; it is why I chose the title I did for this posting. At some point late in this process of blogging, I’ll show bufferbloat in application software as well, just to complete the journey. It’s “we” who have made/are making this mistake.

Certainly, there may have been unintended consequences of SLA contracts; but as the last SLA I ever worried about was about 15 years ago, I’m hardly someone to comment on the perverse incentives that may have entered the system.

December 7, 2010 at 10:31 am |

See Nagle’s RFC 970 “On packet switches with infinite storage”. Even in 1985 the early roots of this problem were visible.

Note also that Nagle suggests dropping the *last* packet in the host’s queue when one must be dropped. If we want drops to produce rapid feedback, dropping the *first* one in the queue would notify the receiving host earlier that there’s a problem.
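The difference in feedback delay can be made concrete with a toy queue. The sketch below fills a FIFO, forces one drop under each policy, and counts how many packets must still be delivered before the receiver can notice the hole in the sequence. The function names and the queue size of 100 are illustrative assumptions.

```python
from collections import deque


def enqueue(queue, packet, capacity, policy):
    """Add a packet to a bounded FIFO; return the dropped packet, or None."""
    if len(queue) < capacity:
        queue.append(packet)
        return None
    if policy == "tail":
        return packet  # queue is full: discard the arriving packet
    if policy == "head":
        dropped = queue.popleft()  # discard the oldest packet instead
        queue.append(packet)
        return dropped
    raise ValueError(f"unknown policy: {policy!r}")


def packets_ahead_of_gap(capacity, policy):
    """Packets still to be delivered before the receiver can see the hole."""
    queue = deque(range(capacity))  # full queue: sequence numbers 0..capacity-1
    dropped = enqueue(queue, capacity, capacity, policy)
    return sum(1 for seq in queue if seq < dropped)


for policy in ("tail", "head"):
    print(policy, "drop:", packets_ahead_of_gap(100, policy), "packets ahead of the gap")
```

With tail drop the entire queue has to drain before the loss becomes visible; with head drop the very next packet delivered reveals it. The bigger the buffer, the bigger that difference.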

December 7, 2010 at 12:02 pm |

and RFC 896, also by John Nagle, is worth reminding yourselves of: I’ve been alluding to congestion collapse, and we all need to remember what was said in 1984 as I move onto that topic….

Right, of course, is in the eye of the beholder: real AQM (RED or something better; classic RED has not one, but two bugs, according to Van when I talked to him in August) is also better than head drop, as the queues never grow to such a huge size (remember, they are now often orders of magnitude bigger than they should be, and there is no “single right answer” to the question). I’ll move onto that topic soon as well.

December 7, 2010 at 10:53 am |

One of the strangest support calls we got at PSINet was “web pages load 1/2 way and stop”, which was tracked to a bad buffer on a router on our network. My home lab has a DOCSIS 3 modem load balanced with FIOS 20/20 using the Vyatta software router. I’d be interested in the mitigations.

December 7, 2010 at 12:05 pm |

Tomorrow. Today’s posting will be a couple areas where I know the issue is/has affected the network neutrality discussions. Since they are ongoing, I want to inject a bit of insight (and opinion) on that topic, and feel I can’t take my time and come back to it later and have the observations illuminate the discussion (whatever side of the debate you may be on).

December 7, 2010 at 11:59 am |

Isn’t this precisely what the Internet is supposed to do?

a) Never tell the application to stop sending, i.e. no back pressure, if the application has stuff to send, let it send;

b) treat everything equally giving no special treatment to packets. What the net neutrality types are always crying for.

Now this may not be the behavior that is desirable but it seems to be in line with what has been advocated for many years.

December 7, 2010 at 12:59 pm |

Heh.

My view on NN is that the network is supposed to do what I ask it to (it’s what I’m paying my ISP to provide service for and my money may need to go further than my immediate ISP in the form of traffic exchange agreements, at times), and do it with some fairness when sharing is required. Note that I personally don’t have problems with paying extra for premium performance at busy times of day (which is why it makes me sad that the best mitigation for bufferbloat right now defeats Comcast’s Powerboost, which, in internet tradition, is trying to give me extra performance when it doesn’t cost them extra).

It’s having others make yea or nay decisions on what I can access and/or get decent service for that makes my hackles rise immediately and will get me all worked up on the topic. I should be able to choose the service I get, and without the “bundling” disaster that has made me pull the plug on cable TV.

Having a network which is not fair under load, and which causes operational nightmares for users and ISPs alike, is a jointly losing strategy. That’s where we are today. A lose-lose situation, if ever there was one.

December 7, 2010 at 12:08 pm |

One possibility that’s been proposed is RAQM (REMOTE Active Queue Management, http://www.cs.purdue.edu/homes/eblanton/publications/raqm.ps ):

Namely, that by doing delay based estimation it becomes possible to divorce the point of control (the ‘congestion notification’, aka, where to drop the packets) from the bottleneck, allowing you to get active queue management even when the queues don’t support active queue management.

Thus a properly equipped in-path device (like a WRT system) could kludge-fix ALL the paths going through it for buffer problems, without needing to be the bottleneck itself (unlike conventional traffic shaping).

This will fail if there are some flows through the bottleneck that aren’t controlled by the RAQM device, but otherwise should work very well.

And there also is a 90% solution in queue engineering: queues sized in delay rather than capacity.

If a queue is considered ‘full’ if the oldest packet is > 200ms old, this will still allow cross-the-world good bandwidth (you need a minimum queue size of ~bandwidth*delay/sqrt(N), so with 200ms US to Europe pingtimes, 200ms is big enough for most).

This is NOT optimal (the optimal size should be based on measured RTTs and dynamically changed), but it’s at least in the right ballpark: you still get full rate TCP throughput on reasonable cross-the-planet links, and you add a maximum delay of 250ms latency in the worst case.
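A queue sized in delay rather than capacity might look something like the sketch below: it refuses new packets whenever the oldest packet it holds has waited longer than the limit. This is a toy model under stated assumptions, not RAQM and not any shipping implementation; the class name, method names and the helper for the bandwidth*delay/sqrt(N) rule are invented for illustration.

```python
import math
from collections import deque


class DelayBoundedQueue:
    """FIFO that counts as full once its oldest packet exceeds max_age_s."""

    def __init__(self, max_age_s: float = 0.2):
        self.max_age_s = max_age_s
        self._packets = deque()  # entries are (enqueue_time, packet)

    def is_full(self, now: float) -> bool:
        return bool(self._packets) and now - self._packets[0][0] > self.max_age_s

    def enqueue(self, packet, now: float) -> bool:
        """Queue the packet; return False if it was dropped instead."""
        if self.is_full(now):
            return False
        self._packets.append((now, packet))
        return True

    def dequeue(self):
        """Remove and return the oldest packet, or None if the queue is empty."""
        return self._packets.popleft()[1] if self._packets else None

    def __len__(self) -> int:
        return len(self._packets)


def min_buffer_bytes(rate_bps: float, rtt_s: float, flows: int) -> float:
    """The bandwidth * delay / sqrt(N) rule of thumb quoted above, in bytes."""
    return rate_bps * rtt_s / 8 / math.sqrt(flows)
```

For example, `min_buffer_bytes(10e6, 0.2, 100)` gives 25000 bytes for a hypothetical 10 Mbit/s link carrying 100 flows. A byte-counted buffer of fixed size holds wildly different amounts of delay depending on the link rate, whereas the age check above bounds the delay directly.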

December 7, 2010 at 12:38 pm |

Certainly the good is the enemy of the perfect; far be it from me to tell people to not do something less broken than they currently do. I’m often seeing latencies in seconds, getting to 200ms would be a serious improvement. And RAQM may help mitigate the problem while we fix all the broken gear properly.

I will point out, however, that we can’t stop at 200ms (a number that networking people seem to like, as it is convenient and achievable with little thought or hard work). The reality of human interaction and the speed of light is that *any* additional unnecessary latency is often/usually too much. As a UI guy, my metrics have always been (since I learned this stuff first hand in the 1980’s), that to:

Given that vertical retrace at 60hz puts you statistically almost behind from the get-go (on average, you’ve lost 8ms right there, even with a really good OS and scheduler), and most paths are 10’s to a hundred milliseconds and we can’t repeal the speed of light, the problem is harder than most in the networking community tend to acknowledge. Even a gigabit switch when loaded may insert significant latency due to buffering.
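The 8ms figure falls out of simple arithmetic: if a screen update becomes ready at a uniformly random moment within a refresh interval, it waits on average half an interval for the next retrace. A minimal sketch, where the uniform-arrival assumption and the function name are mine:

```python
def mean_retrace_wait_ms(refresh_hz: float) -> float:
    """Average wait for the next vertical retrace, assuming uniform arrival."""
    if refresh_hz <= 0:
        raise ValueError("refresh_hz must be positive")
    frame_period_ms = 1000.0 / refresh_hz
    return frame_period_ms / 2.0


print(f"{mean_retrace_wait_ms(60):.1f} ms")  # about 8.3 ms at 60 Hz
```

That is latency spent before a single packet has left the machine, and it comes out of the same budget the network has to fit into.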

I see latency as one of the great challenges for the networking/OS community. It’s probably as difficult as the “go fast” problem, and we want an internet that does both simultaneously without tuning, under load.

Lest people think this is unrealistic, I’ll point out that my rubber banding experiments were on a Microvax II on a 10Mbps ethernet in 1985; we got to 16ms over the X protocol over TCP on that local network then; it required that to get client side rubber banding to “feel” physically attached to the hand when running remotely. While client side java/javascript has relaxed the need some, it hasn’t gotten rid of all of the need, and the typing perception requirements are still necessary (as Google Instant has shown).

Latency you never, ever get back.

December 7, 2010 at 4:55 pm |

Here’s the reason why so many dirty-network types like me want to say “200ms and done” (Jim knows this, it’s more just for the record in general):

It’s because anything less than 200ms REQUIRES that every bottleneck in the path implement traffic classification and prioritization.

It is impossible to have simple queues which satisfy the requirements of both LPBs (“Low Ping Bastards”: any application with strong realtime components like first person shooters, VoIP, etc) and sustained TCP throughput at the same time.

Thus the choices are either a compromise that produces the maximum benefit for both types of traffic (~200ms is a very good answer, and RAQM can easily do this) or do a forklift upgrade on EVERY bottleneck in the Internet to do multiple-queue traffic prioritization.

January 4, 2011 at 6:14 am

@Nicholas Weaver:

You seem to be stuck with legacy information. Achieving low (zero) loss, low (<0.98) goodput is indeed impossible without a major overhaul of deployed congestion control and without separating (corruption) loss from congestion feedback.

You may want to learn about DCTCP (based on alpha/beta ECN TCP aka ECN-hat), virtual queues (deployed in ATM but that technology became too costly) and CONEX (re-ECN) which provides the basic signalling foundation to implement all that goodness.

Regards

December 7, 2010 at 5:19 pm |

And I no longer think there is a good excuse not to do full AQM going forward: a dual issue gigahertz SOC with embedded NIC dissipates on the order of 1 Watt, and costs no more than $15 (right now). So something fully capable of “working right” by implementing full AQM should be in hardware/software/firmware designs going forward, IMHO, and we can mitigate the problem to the order of 200ms as best we can in the existing plant until it gets swapped out. That I seek the ideal while also wanting the good ASAP is not a contradiction in my view.

This is why I’ve been talking both about mitigation and solution to the buffering problem, rather than a single “fix”.

I *really* don’t want people to leave under the impression that 200ms is good enough. Many who haven’t worked in the UI field don’t understand the UI realities that exist. And there is a market for equipment that doesn’t just work OK, but works well. I see it as a market opportunity for equipment vendors that solve the problem properly.

December 7, 2010 at 4:09 pm |

You should be running into bigtime problems anytime you saturate your upstream on a cable modem shouldn’t you? That is the innate behavior of the two-wire topology.

December 7, 2010 at 4:16 pm |

No, if buffering is correct in a system, two (or more) TCP sessions should fairly share the link just fine even with the connection saturated. You should never be seeing latencies of the order we’re getting.

December 7, 2010 at 4:55 pm |

I don’t have the expertise to know if you’re right or not, but I’ve been noticing these sorts of issues for most of the last decade on and off, occasionally musing: TCP has features built in to avoid these problems, doesn’t it? Why don’t they work!

Recent experiences streaming random internet video to recent Windows devices on a (locally) quiet network have been equally confounding. The video is supposed to degrade seamlessly, but instead I get sputtering high quality video — maybe the players are written wrong, but I’m suspicious.

I don’t quite have the expertise to judge, but intuitively this makes sense. Fortuitously there should be a much more hackable home router on my desk tomorrow. I shall follow along eagerly.

December 7, 2010 at 5:06 pm |

A couple of observations:

– there are a number of ‘rules of thumb’ which call for rather big buffers (google Villamizar tcp buffer size).

– TCP congestion avoidance works under the assumption that bit-error-induced packet loss is very rare. This is true for optical networks, but less true for copper. Wireless is extremely lossy. The link layer has to do some error correction or TCP would never get up to speed. This comes at the price of some buffering.
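
The rule of thumb usually attributed to Villamizar sizes a buffer at one bandwidth-delay product (link rate times round-trip time). A minimal sketch of that arithmetic follows; the 100 ms RTT and the link rates are illustrative assumptions, not measurements from this thread.

```python
def bdp_buffer_bytes(link_bits_per_s: float, rtt_s: float) -> float:
    """Bandwidth-delay product: bytes in flight on the link over one RTT."""
    return link_bits_per_s * rtt_s / 8.0


def drain_time_s(buffer_bytes: float, link_bits_per_s: float) -> float:
    """Time a completely full buffer takes to drain at the link rate."""
    return buffer_bytes * 8.0 / link_bits_per_s


if __name__ == "__main__":
    rtt = 0.100  # assumed 100 ms round-trip time
    for rate in (1e6, 10e6, 1e9):  # assumed link rates, bits per second
        size = bdp_buffer_bytes(rate, rtt)
        print(f"{rate / 1e6:8.0f} Mbit/s -> {size / 1024:10.1f} KiB buffer")
```

A buffer sized this way adds up to one extra RTT of delay when it is full. If it was sized for a faster link or a longer RTT than the one actually in use, the added delay grows in proportion.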

December 8, 2010 at 7:51 am |

Hi Jim,

For someone who’s always been affected by the symptoms you describe here (thanks to the severely limited upstream links we have in Brazil), this was a very enlightening read. Thanks a lot!

Recently, though, I switched providers (also switching from cable to DSL) and bought the *cheapest* modem I could find. Much to my surprise, since then I seem to be able to upload at full-speed (which is still rather slow; I have a 400kbps uplink) without rendering the downlink unusable (as has always been the case). Now I’m wondering that maybe to cut costs on the modem they used a small buffer, which in turn doesn’t trick the TCP congestion avoidance algorithms. I guess that’s a possibility?

December 8, 2010 at 9:42 am |

The problem with this theory is that you can’t even buy “small enough” DRAM chips these days for the “right size” buffers (not that there can be any “right size” in the first place, AQM is the “right” solution, as I’ll discuss later).

Most likely it was cheap because it was an old design where memory had been a significant cost issue, or the designer’s firmware wasn’t riddled with bugs they were papering over (a common cause of buffer bloat, since latency has not been tested for properly, and if they don’t meet bandwidth goals, they don’t get certified by carriers for use). Measure the bandwidth you get, and the saturated latency, and you can easily compute the buffer size.
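
That computation can be sketched as follows. Subtracting the idle round-trip time to isolate the queueing delay is an assumption added here, and the latency figures in the example are hypothetical; only the 400kbps uplink comes from the comment above.

```python
def buffer_size_bytes(bandwidth_bits_per_s: float,
                      idle_rtt_s: float,
                      saturated_rtt_s: float) -> float:
    """Estimate the bottleneck buffer from measured throughput and latency.

    The extra delay seen while the link is saturated is taken to be time
    spent waiting in the queue, so queue bytes = rate * extra delay / 8.
    """
    queue_delay_s = saturated_rtt_s - idle_rtt_s
    if queue_delay_s < 0:
        raise ValueError("saturated RTT should not be below idle RTT")
    return bandwidth_bits_per_s * queue_delay_s / 8.0


if __name__ == "__main__":
    # 400 kbit/s uplink; 20 ms idle and 1.2 s saturated RTT are made up.
    size = buffer_size_bytes(400_000, 0.020, 1.200)
    print(f"estimated buffer: {size / 1024:.1f} KiB")
```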

December 9, 2010 at 6:42 am |

You might be interested in the 2007 paper

Marcel Dischinger et al, Characterizing Residential Broadband Networks

(available at http://broadband.mpi-sws.org/residential/)

which uses an interesting test methodology to separately measure up- and downlink, and reports queuing delays of several *seconds* on some cable modem uplinks.

December 9, 2010 at 7:53 am |

Thanks.

Note I had problems with that link, but found it at http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.65.6825&rep=rep1&type=pdf

A quick glance shows inconsistent use of RED as well in it.

December 9, 2010 at 12:34 pm |

Great analysis.

I was running into this with a 128kbit/s uplink I had once. The solution I used was to put a Linux box in front of the modem, capping my bandwidth at 100kbit/s using a tiny queue, effectively taking the modem’s queue out of the equation. I couldn’t do much about the downlink, though, except try to manage TCP ACKs.

I guess this is a bit of a tangent, but what you say about overly clean networks is often observed when tunneling TCP over TCP, as in the not uncommon “VPN over SSH” situation. Saturating such a network will tend to bring everything to a halt, since no packets are dropped in the upper layer.

Avery Pennarun is solving the SSH VPN issue in his sshuttle project: http://apenwarr.ca/log/?m=201005#02

December 9, 2010 at 9:50 pm |

I see you have mentioned this elsewhere, but my first reaction to reading this was “where art thou, ECN?”. This is the very problem ECN is meant to solve. Yet we don’t implement it because the short term pain may be high.

I get IPv6 deja-vu when I think about it. But unlike IPv6, there is no d-day coming. I suspect that rather than wear the one-off pain and fix the problem with ECN, we will just let the water warm up until it becomes intolerable, then tweak a few queue lengths until it becomes just tolerable again. If there isn’t a collective effort put in by a few big players, that is where we will sit for time immemorial.

Oh, and for those thinking implementing QOS at home will fix the problem for you – it will only do that if you are the cause of the congestion in the cloud. If someone else is filling up the queues in an upstream router nothing you can do can change the latency you see. From Jim’s description, this is the situation he finds himself in.

December 9, 2010 at 10:41 pm |

Steve Bauer at MIT (and probably others) are researching the state of ECN suppression. Until that and/or other research is complete, it’s not clear if we can. A conversation with Steve a month or two ago makes me hope it may be usable and useful in some parts of the network, but the general answer isn’t in yet.

And yes, you can possibly help yourself with QOS locally for your VOIP in a limited environment like your home, but you really still have to manage your queues and get TCP behaving correctly. If you don’t, you run smack dab into congestion someplace.

And as ISPs aren’t necessarily managing queues, we have lots of messy problems. Others can help by starting to monitor their ISPs carefully with tools like smokeping (and educating them as to the issues, if they lack clues). In the limited probing I’ve done of Comcast’s network, it’s always been smooth as a baby’s behind, until that last killer mile. Other monitoring I’ve done (particularly from hotel rooms) makes me believe, as the anecdotal and other data suggest, that there are clueless ISPs out there. I’ll explain next week as to why there has been a reluctance to use AQM.

December 21, 2010 at 2:59 pm |

Jim,

Something else is going on here too.

Here’s another snapshot from your ConPing/210aKnoll.pcap file:

I’m guessing your data was captured directly on the sending machine, and that it has a NIC doing TSO.

The effect should be small, but because of the way you’ve captured the data, none of it can be truly trusted. The TCP RTT plots are measuring latency starting with a bogus TCP segment that hasn’t actually been transmitted yet. It still needs to be sliced into MSS-sized segments which then need to be streamed onto your LAN.

Yes, serialization delay is low on the LAN, but it would be nice to see this data more accurately.
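
To put a rough number on that, here is a small sketch of how many MSS-sized segments one TSO super-segment becomes and how long it takes to serialize onto the LAN. The 64 KiB super-segment, the 1448-byte MSS and the link speeds are assumptions, and per-frame header overhead is ignored.

```python
import math


def tso_segments(tso_bytes: int, mss_bytes: int) -> int:
    """Number of MSS-sized segments the NIC cuts one TSO buffer into."""
    return math.ceil(tso_bytes / mss_bytes)


def serialization_delay_s(num_bytes: int, link_bits_per_s: float) -> float:
    """Time to clock num_bytes onto a link, ignoring framing overhead."""
    return num_bytes * 8.0 / link_bits_per_s


if __name__ == "__main__":
    tso = 64 * 1024  # assumed maximum TSO super-segment, bytes
    mss = 1448       # assumed MSS (1500-byte MTU with TCP timestamps)
    print(f"{tso_segments(tso, mss)} segments per super-segment")
    for rate in (100e6, 1e9):  # assumed LAN speeds, bits per second
        ms = serialization_delay_s(tso, rate) * 1000
        print(f"{rate / 1e6:6.0f} Mbit/s LAN: {ms:.2f} ms to serialize")
```

Under these assumptions the skew is milliseconds at most, which is small next to the latencies in the plots but not zero.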

Do you perhaps have captures without TSO, or (better) taken by a 3rd party to the transaction?

I’ve seen NICs (Broadcom chips w/ tg3 driver) hold onto data for hundreds of ms.

Here’s something else… Around 16:08:50 your TCP session was showing latency continuously over 1000ms:

…but the pings during this same window are much closer to 100ms:

15:08:49.745852 IP 24.218.178.78 > 18.7.25.161: ICMP echo request, id 30925, seq 386, length 64

15:08:49.789203 IP 18.7.25.161 > 24.218.178.78: ICMP echo reply, id 30925, seq 383, length 64

15:08:50.752000 IP 24.218.178.78 > 18.7.25.161: ICMP echo request, id 30925, seq 389, length 64

15:08:50.843154 IP 18.7.25.161 > 24.218.178.78: ICMP echo reply, id 30925, seq 386, length 64

15:08:51.754606 IP 24.218.178.78 > 18.7.25.161: ICMP echo request, id 30925, seq 392, length 64

15:08:51.926162 IP 18.7.25.161 > 24.218.178.78: ICMP echo reply, id 30925, seq 389, length 64
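
One way to check ping RTTs in tcpdump output like the lines above is to pair each echo reply with the request that carries the same id and seq, rather than with the line next to it. The following is a minimal sketch; the regular expression is written only for this output format, and midnight wraparound is not handled.

```python
import re

LINE_RE = re.compile(
    r"(\d+):(\d+):(\d+\.\d+) IP \S+ > \S+: ICMP echo (request|reply), "
    r"id (\d+), seq (\d+)"
)


def echo_rtts(lines):
    """Return {(id, seq): rtt_seconds} for replies whose request was seen."""
    sent = {}
    rtts = {}
    for line in lines:
        m = LINE_RE.search(line)
        if not m:
            continue
        hours, minutes, seconds, kind, ident, seq = m.groups()
        t = int(hours) * 3600 + int(minutes) * 60 + float(seconds)
        key = (int(ident), int(seq))
        if kind == "request":
            sent[key] = t
        elif key in sent:
            rtts[key] = t - sent[key]
    return rtts


SAMPLE = """\
15:08:49.745852 IP 24.218.178.78 > 18.7.25.161: ICMP echo request, id 30925, seq 386, length 64
15:08:49.789203 IP 18.7.25.161 > 24.218.178.78: ICMP echo reply, id 30925, seq 383, length 64
15:08:50.752000 IP 24.218.178.78 > 18.7.25.161: ICMP echo request, id 30925, seq 389, length 64
15:08:50.843154 IP 18.7.25.161 > 24.218.178.78: ICMP echo reply, id 30925, seq 386, length 64
15:08:51.754606 IP 24.218.178.78 > 18.7.25.161: ICMP echo request, id 30925, seq 392, length 64
15:08:51.926162 IP 18.7.25.161 > 24.218.178.78: ICMP echo reply, id 30925, seq 389, length 64
"""

if __name__ == "__main__":
    for (ident, seq), rtt in sorted(echo_rtts(SAMPLE.splitlines()).items()):
        print(f"id {ident} seq {seq}: {rtt * 1000:.1f} ms")
```

In this excerpt only seq 386 and seq 389 have both a request and a reply, and paired that way they come out at roughly 1.10 s and 1.17 s. It is worth checking the sequence numbers before comparing the pings against the TCP RTT plot.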

Maybe you’ve already addressed the possibility of priority-queueing small packets… I’m just starting to follow along with your project and am certainly not up to speed 🙂

Would you please post a link to the Comcast provisioning document written by your neighbor?

Thanks!

December 22, 2010 at 10:13 am |

At the time I took that data, I had no good way to take traces except on the transmitting system.

The particular laptops I’ve used to take data have Intel NICs, not the Broadcom, and I don’t think Linux distros are typically doing traffic control games (which maybe they should). But with the current very large transmit rings, from what I’ve gathered in other comments to this blog, the traffic control would be ineffective anyway (until those buffers are cut down to size, as one person posted a patch to do).

My pings in the traces are exactly in line with what I observe from without (e.g. the DSL reports Smokeping data) in magnitude. If you are motivated, it would be interesting to look a bit further into the pings; Van Jacobson noted that since Linux happened to be used on both ends, there is already timestamp data in the traces.

I’ve since bought one of these port mirroring switches, which are quite inexpensive ($150). At some point, it would indeed be better to collect data that way (now that I’m able), and I expect to do so before I do formal publication. For the next few weeks, I need to finish writing up what I know, update an overview presentation I did several months ago, and do a few other things, before circling back to try to write more formally and rigorously.

In any case, while I’d certainly like to retake the data before a formal publication, I encourage you to do your own experiments. The Netalyzr data shows buffering is dismayingly common (e.g. Nick Weaver at ICSI immediately reproduced similar results on his home connection, as soon as I made contact with him toward the end of the summer). If anything, the Netalyzr data has underestimated the frequency of the problem (its UDP buffering test wasn’t aggressive enough to fill higher bandwidth connections such as FIOS all the time, and can be confused by cross traffic). Broadband bufferbloat isn’t a rare phenomenon (worse luck).

January 8, 2011 at 6:42 am |

Chrismarget,

You suggested that small packets might be priority queued. There has been research conducted on the one-way delay in 3G networks where it was shown that large packets get through faster than smaller ones, at least in Sweden (I guess it’s dependent on the ISP’s hardware). See the links below; there seems to be a threshold at around 250 bytes. The authors claim that the reason for this is that the technology used changes from WCDMA to HSDPA around this point, resulting in lower latencies.

http://www.bth.se/fou/Forskinfo.nsf/Sok/73da45afadb2e7cfc12577030030ba80!OpenDocument

http://www.bth.se/fou/Forskinfo.nsf/Sok/6933eb641a36f5b5c12577270026654c!OpenDocument

January 8, 2011 at 9:57 am |

And while there may be reasons to give small packets priority, those reasons don’t include actually solving bufferbloat…

December 21, 2010 at 4:51 pm |

Hi Jim,

I think you would be very interested in reading my master’s thesis [1] (http://goo.gl/sBHtg), as it contains pieces to solve your puzzle.

I think you have actually missed what is really happening.

The real problem is that TCP/IP is clocked by the ACK packets, and on asymmetric links (like ADSL and DOCSIS), the ACK packets are simply coming downstream too fast (on the larger downstream link), resulting in bursts and high latency on the upstream link. See page 11 in the thesis for a nice drawing.

With the ADSL-optimizer I actually solved the problem, by having an ACK queue, which is bandwidth “sized” to the opposite link size. The ADSL-optimizer also solves the issue by seizing control of the queue, which actually isn’t that easy on ADSL due to the special link-layer overhead (see chapters 5 and 6).
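
As a rough illustration of how ACK bandwidth relates to the rate of the opposite link, the sketch below computes the ACK stream needed to sustain a given data rate in the other direction. It is not the ADSL-optimizer’s implementation: the 1500-byte data packets, 40-byte ACKs, one ACK per two segments and the link rates are all assumptions, and the ADSL link-layer overhead mentioned above is ignored.

```python
def ack_rate_bits_per_s(data_bits_per_s: float,
                        data_packet_bytes: int = 1500,
                        ack_bytes: int = 40,
                        segments_per_ack: int = 2) -> float:
    """ACK bandwidth needed to acknowledge a data stream of the given rate."""
    packets_per_s = data_bits_per_s / (data_packet_bytes * 8.0)
    acks_per_s = packets_per_s / segments_per_ack
    return acks_per_s * ack_bytes * 8.0


if __name__ == "__main__":
    down = 8e6    # assumed downstream rate, bits per second
    up = 512e3    # assumed upstream rate, bits per second
    acks = ack_rate_bits_per_s(down)
    print(f"ACKs for {down / 1e6:.0f} Mbit/s down: {acks / 1e3:.1f} kbit/s "
          f"({100 * acks / up:.0f}% of a {up / 1e3:.0f} kbit/s uplink)")
```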

My investigations show that the major issue is that the TCP/IP congestion protocol was not designed with asymmetric links in mind.

But there is still some truth in the claim that the ISPs are increasing the buffer sizes too much, which makes this effect even worse.

I guess the real (but impractical) solution would be to implement a new TCP algorithm which handles this asymmetry, and e.g. isn’t based on the ACK feedback, and deploy it on your home machines (as the effect is largest here).

–Jesper Dangaard Brouer

[1] http://www.adsl-optimizer.dk/thesis/

December 22, 2010 at 9:51 am |

Thanks for the pointer to your thesis. I’ll take a look.

While I don’t doubt that there are issues caused by the asymmetric nature of many of the broadband connections, I also would be surprised if that was the primary issue here (above and beyond the fact that I’ve already had real TCP experts, which I’m not, look at the data). In part, because I see the same effect on symmetric FIOS service….